
AI tools should, ideally, prioritize human well-being, agency and equity, steering away from harmful consequences.

Across various industries, AI is instrumental in solving many challenging problems, such as improving tumor assessments in cancer treatment or using natural language processing in banking for customer-centric transformation. The application of AI is also gaining traction in oncologic care, where computer vision and predictive analytics help better identify cancer patients who are candidates for lifesaving surgery.

Despite good intentions, a lack of intentionality throughout AI development and use can lead to outcomes that don't prioritize people.

To address this, let's discuss three approaches to AI systems that can help prioritize people: making human agency a priority, being proactive in finding potential for inequities, and promoting data literacy for all.

1. Human agency as a priority

As the use of AI systems becomes ubiquitous and begins to impact our lives significantly, it is crucial that we keep humans at the center. At SAS, we define human agency in AI as the fundamental ability of humans to maintain control over the design, development, deployment, and use of AI systems that affect their lives.

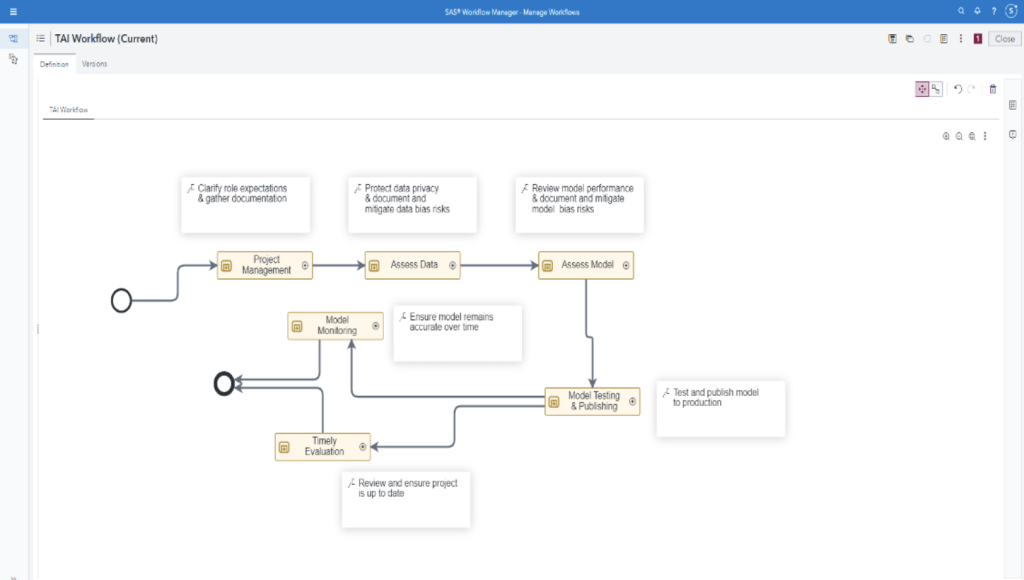

Ensuring human agency in AI involves adopting various strategies. One notable approach is to require human participation in, and approval of, certain tasks within the AI and analytics workflow. This is commonly known as the human-in-the-loop approach. It promotes human oversight and agency within the analysis workflow, creating collaboration between humans and AI.
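The human-in-the-loop idea can be sketched in a few lines. This is a minimal illustration, not a SAS API: it assumes a hypothetical workflow in which the model only recommends an action when its score reaches a threshold, and nothing happens until a human reviewer approves that recommendation. The names `run_with_human_approval`, `Outcome` and the default `threshold` are invented for illustration.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Outcome:
    case_id: str
    score: float
    action_taken: bool       # final decision after any human review
    reviewed_by_human: bool  # whether a person was asked to approve


def run_with_human_approval(
    cases: List[Tuple[str, float]],
    approve: Callable[[str, float], bool],
    threshold: float = 0.5,
) -> List[Outcome]:
    """Hypothetical human-in-the-loop gate.

    The model recommends an action for any case whose score is at or above
    `threshold`, but the action is only taken if the human reviewer
    (`approve`) signs off. Cases below the threshold get no action and no review.
    """
    outcomes = []
    for case_id, score in cases:
        if score >= threshold:
            # The model's recommendation goes to a person, who has the final say.
            decision = approve(case_id, score)
            outcomes.append(Outcome(case_id, score, decision, True))
        else:
            # No recommendation, so there is nothing to approve.
            outcomes.append(Outcome(case_id, score, False, False))
    return outcomes


if __name__ == "__main__":
    # A stand-in reviewer who rejects case "B" after inspecting it.
    reviewer = lambda case_id, score: case_id != "B"
    for outcome in run_with_human_approval([("A", 0.9), ("B", 0.7), ("C", 0.2)], reviewer):
        print(outcome)
```

In a real deployment the `approve` callback would more likely be a review queue or user interface than a lambda. Routing only above-threshold cases to a person is one design choice among several; a team might instead route low-confidence or high-stakes cases.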

2. Proactiveness in finding potential for inequities

When striving for trustworthy AI, a human-centric approach should extend its focus to encompass a diverse and inclusive population. At SAS we advocate for a focus on vulnerable populations, recognizing that safeguarding the most vulnerable benefits society as a whole. This human-centric philosophy prompts a critical set of questions: "For what purpose?" and "For whom could this fail?" These questions help developers, deployers and users of AI systems evaluate who may be harmed by the use of AI solutions.

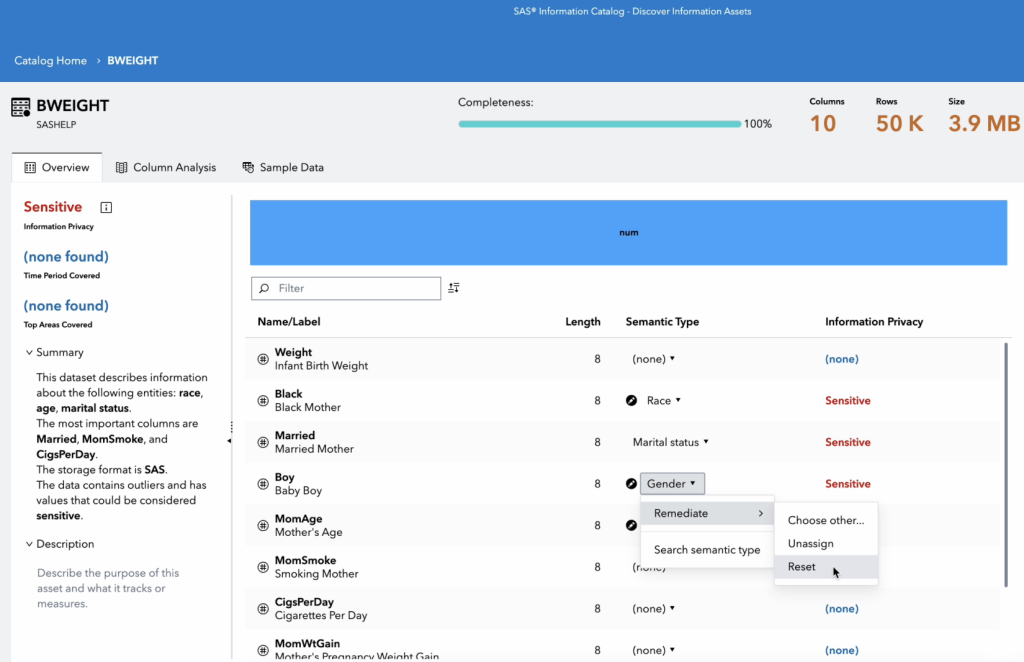

Understanding the AI system's purpose can also clarify its target populations. This clarity can help reduce some bias by making sure that AI systems are built on data representative of the population. Automated data exploration becomes a critical tool in this context, as it assesses the distributions of variables in the training data and analyzes the relationships between input and target variables before models are trained. This proactive data exploration helps ensure that insights derived from the data reflect diverse populations and address the specific needs of vulnerable groups. Once the model is developed, it should be reviewed for potential differences in model performance across groups, particularly within specified sensitive variables. Bias must be assessed and reported with respect to model performance, accuracy and predictions. Once groups at risk are identified, AI developers can take targeted steps to foster fairness.
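To make the per-group review concrete, here is a minimal sketch in plain Python. It is not how SAS Model Studio's Fairness and Bias assessment is implemented; it simply computes a few common metrics (accuracy, true positive rate and predicted positive rate) for each value of a sensitive variable and reports the largest gap between groups. The function names and the toy data are hypothetical.

```python
import math
from collections import defaultdict
from typing import Dict, Hashable, List


def group_metrics(
    y_true: List[int], y_pred: List[int], groups: List[Hashable]
) -> Dict[Hashable, Dict[str, float]]:
    """Per-group accuracy, true positive rate and predicted positive rate
    for binary labels (1 = positive)."""
    tallies = defaultdict(lambda: {"n": 0, "correct": 0, "pos": 0, "tp": 0, "pred_pos": 0})
    for t, p, g in zip(y_true, y_pred, groups):
        c = tallies[g]
        c["n"] += 1
        c["correct"] += int(t == p)
        c["pos"] += int(t == 1)
        c["tp"] += int(t == 1 and p == 1)
        c["pred_pos"] += int(p == 1)

    report = {}
    for g, c in tallies.items():
        report[g] = {
            "accuracy": c["correct"] / c["n"],
            # Undefined (NaN) when a group has no actual positives.
            "true_positive_rate": c["tp"] / c["pos"] if c["pos"] else math.nan,
            "predicted_positive_rate": c["pred_pos"] / c["n"],
        }
    return report


def max_gap(report: Dict[Hashable, Dict[str, float]], metric: str) -> float:
    """Largest difference in `metric` between any two groups, ignoring NaN values."""
    values = [m[metric] for m in report.values() if not math.isnan(m[metric])]
    return max(values) - min(values) if values else math.nan


if __name__ == "__main__":
    # Toy labels and predictions for two groups of a sensitive variable.
    y_true = [1, 0, 1, 1, 0, 1, 0, 0]
    y_pred = [1, 0, 1, 0, 0, 0, 1, 0]
    groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
    report = group_metrics(y_true, y_pred, groups)
    for metric in ("accuracy", "true_positive_rate", "predicted_positive_rate"):
        print(metric, max_gap(report, metric))
```

Which metric matters most, and how large a gap is acceptable, depends on the use case; the sketch only surfaces the differences so that people can judge them and decide on targeted steps.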

Fig 3: Proactively understand potential differences in model performance across different populations using SAS Model Studio's Fairness and Bias assessment tab.

Fig 4: SAS® Model Studio's automated data exploration provides a representative snapshot of your data.
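One small piece of the "is the training data representative?" question can also be checked by hand. The sketch below assumes you already have reference population shares for a sensitive variable (for example from census figures; the numbers here are made up) and compares them with the group shares observed in the training data. It is an illustration, not SAS's automated data exploration, and `representation_gaps`, `underrepresented` and the 5-percentage-point tolerance are hypothetical.

```python
from collections import Counter
from typing import Dict, Hashable, List


def representation_gaps(
    training_groups: List[Hashable], reference_shares: Dict[Hashable, float]
) -> Dict[Hashable, float]:
    """Observed share in the training data minus the expected reference share,
    for every group listed in `reference_shares`. Groups absent from the
    training data get an observed share of 0; groups not in the reference are ignored."""
    counts = Counter(training_groups)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("training_groups is empty")
    return {
        group: counts.get(group, 0) / total - expected
        for group, expected in reference_shares.items()
    }


def underrepresented(gaps: Dict[Hashable, float], tolerance: float = 0.05) -> List[Hashable]:
    """Groups whose training-data share falls short of the reference by more than `tolerance`."""
    return sorted(g for g, d in gaps.items() if d < -tolerance)


if __name__ == "__main__":
    # Toy data: 80% urban and 20% rural records, versus an assumed 60/40 population split.
    training = ["urban"] * 8 + ["rural"] * 2
    gaps = representation_gaps(training, {"urban": 0.6, "rural": 0.4})
    print(gaps)
    print(underrepresented(gaps))
```

This only compares the marginal distribution of one variable; the fuller exploration described above also looks at relationships between input and target variables.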

3. Data literacy for all

Finally, human centricity in AI also underscores individuals' agency in understanding, influencing and even controlling how AI systems impact their lives. This level of agency relies heavily on data literacy, as defined by Gartner: the ability to read, write and communicate data in context, including an understanding of data sources and constructs, the analytical methods and techniques applied, and the ability to describe the use case, application and resulting value. As AI is increasingly used to make decisions that affect our lives, it becomes essential that individuals develop data literacy. Being data literate, not only at an organizational level but at an individual level, helps us understand how data is used to make decisions for us, how to protect ourselves from data privacy risks, and how to hold AI systems accountable.

Learn more about SAS' free data literacy course

By prioritizing human agency, proactive equity measures and data literacy, a human-centric approach supports responsible AI integration. This alignment with human values can improve lives while minimizing risks and biases in AI implementation, ultimately helping to create a future where technology serves humanity.

Read more stories about data ethics and trustworthy AI in this series

Vrushali Sawant and Kristi Boyd contributed to this text.
