[ad_1]

Introduction

A aim of supervised studying is to construct a mannequin that performs effectively on a set of recent knowledge. The issue is that you could be not have new knowledge, however you possibly can nonetheless expertise this with a process like train-test-validation break up.

Isn’t it attention-grabbing to see how your mannequin performs on an information set? It’s! Probably the greatest facets of working dedicatedly is seeing your efforts being utilized in a well-formed solution to create an environment friendly machine-learning mannequin and generate efficient outcomes.

What’s the Practice Check Validation Cut up?

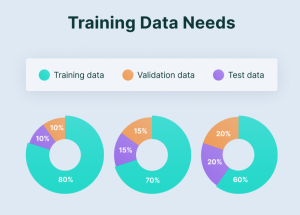

The train-test-validation break up is prime in machine studying and knowledge evaluation, significantly throughout mannequin growth. It includes dividing a dataset into three subsets: coaching, testing, and validation. Practice take a look at break up is a mannequin validation course of that lets you test how your mannequin would carry out with a brand new knowledge set.

The train-test-validation break up helps assess how effectively a machine studying mannequin will generalize to new, unseen knowledge. It additionally prevents overfitting, the place a mannequin performs effectively on the coaching knowledge however fails to generalize to new situations. Through the use of a validation set, practitioners can iteratively regulate the mannequin’s parameters to attain higher efficiency on unseen knowledge.

Significance of Information Splitting in Machine Studying

Information splitting includes dividing a dataset into coaching, validation, and testing subsets. The significance of Information Splitting in Machine Studying covers the next facets:

Coaching, Validation, and Testing

Information splitting divides a dataset into three principal subsets: the coaching set, used to coach the mannequin; the validation set, used to trace mannequin parameters and keep away from overfitting; and the testing set, used for checking the mannequin’s efficiency on new knowledge. Every subset serves a singular function within the iterative strategy of creating a machine-learning mannequin.

Mannequin Growth and Tuning

Through the mannequin growth part, the coaching set is critical for exposing the algorithm to varied patterns inside the knowledge. The mannequin learns from this subset, adjusting its parameters to reduce errors. The validation set is necessary throughout hyperparameter monitoring, serving to to optimize the mannequin’s configuration.

Overfitting Prevention

Overfitting happens when a mannequin learns the coaching knowledge effectively, capturing noise and irrelevant patterns. The validation set acts as a checkpoint, permitting for the detection of overfitting. By evaluating the mannequin’s efficiency on a unique dataset, you possibly can regulate mannequin complexity, strategies, or different hyperparameters to forestall overfitting and improve generalization.

Efficiency Analysis

The testing set is crucial to a machine studying mannequin’s efficiency. After coaching and validation, the mannequin faces the testing set, which checks real-world eventualities. A well-performing mannequin on the testing set signifies that it has efficiently tailored to new, unseen knowledge. This step is necessary for gaining confidence in deploying the mannequin for real-world functions.

Bias and Variance Evaluation

Practice Check Validation Cut up helps in understanding the bias trade-off. The coaching set gives details about the mannequin’s bias, capturing inherent patterns, whereas the validation and testing units assist assess variance, indicating the mannequin’s sensitivity to fluctuations within the dataset. Hanging the correct steadiness between bias and variance is significant for attaining a mannequin that generalizes effectively throughout totally different datasets.

Cross-Validation for Robustness

Past a easy train-validation-test break up, strategies like k-fold cross-validation additional improve the robustness of fashions. Cross-validation includes dividing the dataset into okay subsets, coaching the mannequin on k-1 subsets, and validating the remaining one. This course of is repeated okay instances, and the outcomes are averaged. Cross-validation gives a extra complete understanding of a mannequin’s efficiency throughout totally different subsets of the info.

Significance of Information Splitting in Mannequin Efficiency

The significance of Information splitting in mannequin efficiency serves the next functions:

Analysis of Mannequin Generalization

Fashions shouldn’t solely memorize the coaching knowledge but additionally generalize effectively. Information splitting permits for making a testing set, offering real-world checks for checking how effectively a mannequin performs on new knowledge. With no devoted testing set, the chance of overfitting will increase when a mannequin adapts too carefully to the coaching knowledge. Information splitting mitigates this threat by evaluating a mannequin’s true generalization capabilities.

Prevention of Overfitting

Overfitting happens when a mannequin turns into extra complicated and captures noise or particular patterns from the coaching knowledge, decreasing its generalization capability.

Optimization of Mannequin Hyperparameters Monitoring a mannequin includes adjusting hyperparameters to attain efficiency. This course of requires iterative changes based mostly on mannequin conduct, finished by a separate validation set.

Power Evaluation

A sturdy mannequin ought to carry out constantly throughout totally different datasets and eventualities. Information splitting, significantly k-fold cross-validation, helps assess a mannequin’s robustness. By coaching and validating on totally different subsets, you possibly can acquire insights into how effectively a mannequin generalizes to numerous knowledge distributions.

Bias-Variance Commerce-off Administration

Hanging a steadiness between bias and variance is essential for creating fashions that don’t overfit the info. Information splitting permits the analysis of a mannequin’s bias on the coaching set and its variance on the validation or testing set. This understanding is crucial for optimizing mannequin complexity.

Understanding the Information Cut up: Practice, Check, Validation

For coaching and testing functions of a mannequin, the knowledge must be damaged down into three totally different datasets :

The Coaching Set

It’s the knowledge set used to coach and make the mannequin be taught the hidden options within the knowledge. The coaching set ought to have totally different inputs in order that the mannequin is skilled in all situations and might predict any knowledge pattern that will seem sooner or later.

The Validation Set

The validation set is a set of knowledge that’s used to validate mannequin efficiency throughout coaching.

This validation course of provides info that helps in tuning the mannequin’s configurations. After each epoch, the mannequin is skilled on the coaching set, and the mannequin analysis is carried out on the validation set.

The primary concept of splitting the dataset right into a validation set is to forestall the mannequin from changing into good at classifying the samples within the coaching set however not with the ability to generalize and make correct classifications on the info it has not seen earlier than.

The Check Set

The take a look at set is a set of knowledge used to check the mannequin after finishing the coaching. It gives a ultimate mannequin efficiency when it comes to accuracy and precision.

Information Preprocessing and Cleansing

Information preprocessing includes the transformation of the uncooked dataset into an comprehensible format. Preprocessing knowledge is a vital stage in knowledge mining that helps enhance knowledge effectivity.

Randomization in Information Splitting

Randomization is crucial in machine studying, guaranteeing unbiased coaching, validation, and testing subsets. Randomly shuffling the dataset earlier than partitioning minimizes the chance of introducing patterns particular to the info order. This prevents fashions from studying noisy knowledge based mostly on the association. Randomization enhances the generalization capability of fashions, making them sturdy throughout varied knowledge distributions. It additionally protects in opposition to potential biases, guaranteeing that every subset displays the variety current within the total dataset.

Practice-Check Cut up: How To

To carry out a train-test break up, use libraries like scikit-learn in Python. Import the `train_test_split` operate, specify the dataset, and set the take a look at measurement (e.g., 20%). This operate randomly divides the info into coaching and testing units, preserving the distribution of lessons or outcomes.

Python code for Practice Check Cut up:

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

#import csv

Validation Cut up: How To

After the train-test break up, additional partition the coaching set for a validation break up. That is essential for mannequin tuning. Once more, use `train_test_split` on the coaching knowledge, allocating a portion (e.g., 15%) because the validation set. This aids in refining the mannequin’s parameters with out touching the untouched take a look at set.

Python Code for Validation Cut up

from sklearn.model_selection import train_test_split

X_train_temp, X_temp, y_train_temp, y_temp = train_test_split(X, y, test_size=0.3, random_state=42)

X_val, X_test, y_val, y_test = train_test_split(X_temp, y_temp, test_size=0.5, random_state=42)

#import csv

Practice Check Cut up for Classification

In classification, the info is break up into two elements: coaching and testing units. The mannequin is skilled on a coaching set, and its efficiency is examined on a testing set. The coaching set incorporates 80% of the info, whereas the take a look at set incorporates 20%.

Actual Information Instance:

from sklearn.model_selection import train_test_split

from sklearn.datasets import load_trivia

from sklearn.linear_model import LogisticRegression

from sklearn.metrics import accuracy_score

iris = load_trivia()

X = trivia.knowledge

y = trivia.goal

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

mannequin = LogisticRegression()

mannequin.match(X_train, y_train)

y_pred = mannequin.predict(X_test)

accuracy = accuracy_score(y_test, y_pred)

print(f”Accuracy: {accuracy}”)

#import csv

Output

Accuracy: 1.0

Practice Check Regression

Divide the regression knowledge units into coaching and testing knowledge units. Practice the mannequin based mostly on coaching knowledge, and the efficiency is evaluated based mostly on testing knowledge. The primary goal is to see how effectively the mannequin generalizes to the brand new knowledge set.

from sklearn.model_selection import train_test_split

from sklearn.datasets import load_boston

from sklearn.linear_model import LinearRegression

from sklearn.metrics import mean_squared_error

boston = load_boston()

X = boston.knowledge

y = boston.goal

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

mannequin = LinearRegression()

mannequin.match(X_train, y_train)

y_pred = mannequin.predict(X_test)

mse = mean_squared_error(y_test, y_pred)

print(f”Imply Squared Error: {mse}”)

#import csv

Imply Squared Error: 24.291119474973616

Greatest Practices in Information Splitting

Randomization: Randomly shuffle knowledge earlier than splitting to keep away from order-related biases.

Stratification: Preserve class distribution in every break up, important for classification duties.

Cross-Validation: Make use of k-fold cross-validation for sturdy mannequin evaluation, particularly in smaller datasets.

Frequent Errors to Keep away from

The frequent errors to keep away from whereas performing a Practice-Check-Validation Cut up are:

Information Leakage: Guarantee no info from the take a look at set influences the coaching or validation.

Ignoring Class Imbalance: Tackle class imbalances by stratifying splits for higher mannequin coaching

Overlooking Cross-Validation: Relying solely on a single train-test break up might bias mannequin analysis.

Conclusion

Practice-Check-Validation Cut up is a vital take a look at for testing the effectivity of a machine studying mannequin. It evaluates totally different units of knowledge to test the accuracy of the machine studying mannequin, therefore serving as a vital device within the technological sphere.

Key Takeaways

Strategic Information Division:

Study the significance of dividing knowledge into coaching, testing, and validation units for efficient mannequin growth.

Perceive every subset’s particular roles in stopping overfitting and optimizing mannequin efficiency.

Sensible Implementation:

Purchase the abilities to implement train-test-validation splits utilizing Python libraries.

Comprehend the importance of randomization and stratification for unbiased and dependable mannequin analysis.

Guarding Towards Frequent Errors:

Achieve insights into frequent pitfalls throughout knowledge splitting, equivalent to leakage and sophistication imbalance.

Position of cross-validation in guaranteeing the mannequin’s robustness and generalization throughout numerous datasets.

Associated

[ad_2]

Source link