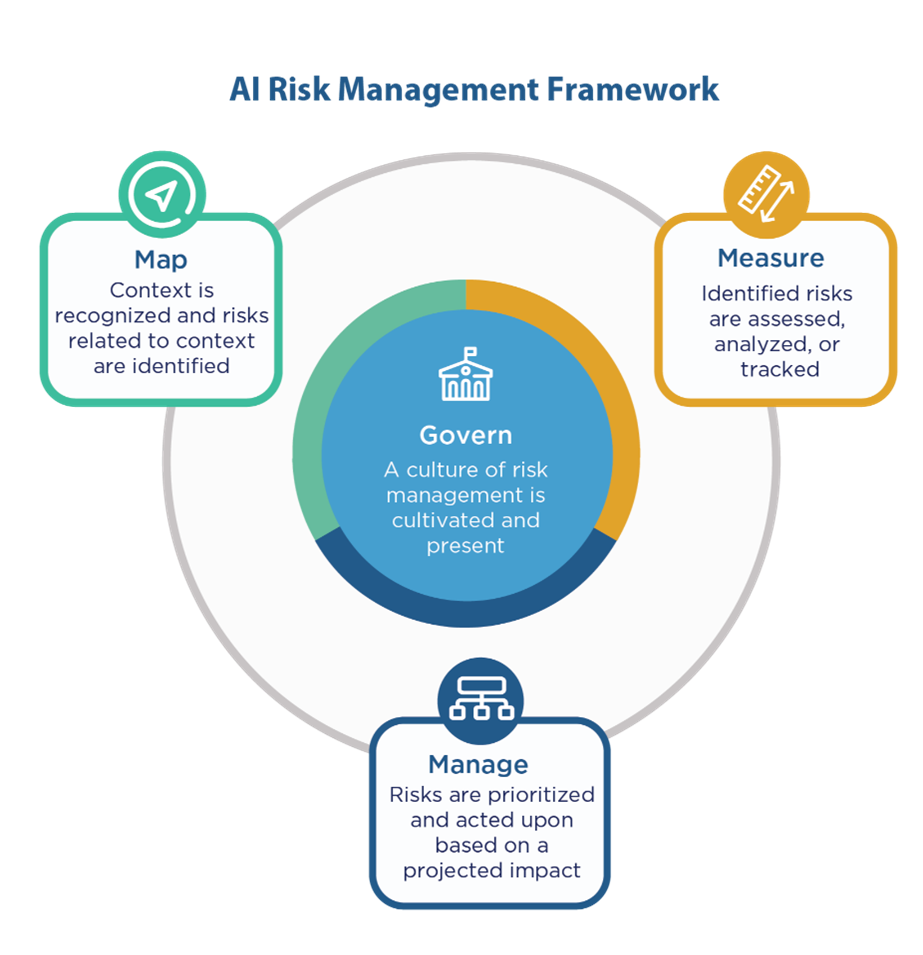

The National Institute of Standards and Technology (NIST) has released a set of standards and best practices within its AI Risk Management Framework for building responsible AI systems.

NIST sits under the U.S. Department of Commerce, and its mission is to promote innovation and industrial competitiveness. NIST offers a portfolio of measurements, standards, and legal metrology to provide recommendations that ensure traceability, enable quality assurance, and harmonize documentary standards and regulatory practices. While these standards are not mandatory, they are designed to increase the trustworthiness of AI systems. The framework is very detailed, with recommendations across four functions: govern, map, measure, and manage. This blog will focus on a few of these recommendations and where they fit within a model’s lifecycle.

Accountability structures are in place so that the appropriate teams and individuals are empowered, responsible, and trained for mapping, measuring and managing AI risks. – Govern 2

Analytics is a team sport, with data scientists, IT/engineering, risk analysts, data engineers, business representatives and other groups all coming together to build an AI system. Thus, to ensure project success, the roles and responsibilities for the project should be defined and understood by all stakeholders. A clear definition of roles and responsibilities prevents duplication of effort, ensures all tasks are accounted for and allows for a streamlined process.

Context is established and understood. AI capabilities, targeted usage, goals and expected benefits and costs compared with appropriate benchmarks are understood. Risks and benefits are mapped for all components of the AI system including third-party software and data. – Map 1, 3 and 4

AI projects should be well-defined and documented to prevent future knowledge gaps and ensure decision transparency. The proposed model usage, the end users, the expected performance of the AI system, strategies for resolving issues, potential negative impacts, deployment strategies, data limitations, potential privacy concerns, testing strategies and more should be documented and stored in a shared location. Documenting the relevant information may increase initial project overhead; however, the benefits of a well-documented and understood model outweigh the initial costs.

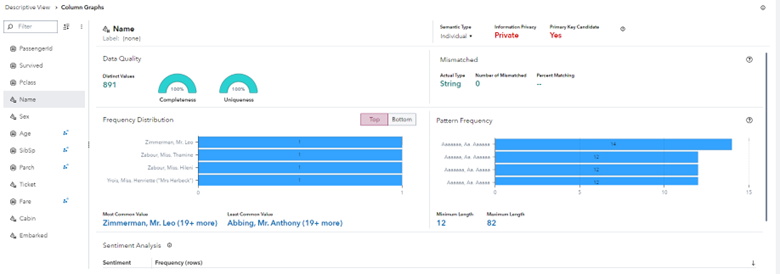

System requirements (e.g., “the system shall respect the privacy of its users”) are elicited from and understood by relevant AI actors. Design decisions take socio-technical implications into account to address AI risks. – Map 1.6

Within the training data, organizations should ensure that personally identifiable information (PII) and other sensitive data are masked or removed when not required for modeling. Keeping PII within the training table increases the risk of private information being leaked to bad actors. Removing PII and following the principle of least privilege also adhere to common data and information security best practices.
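As a rough illustration of masking or removing PII before modeling, the sketch below drops fields that are not needed and replaces an identifier with a salted hash. The column names (`name`, `email`, `phone`, `customer_id`) and the helper functions are hypothetical and not part of the NIST framework or any SAS tooling. Salted hashing is pseudonymization rather than full anonymization, so treat it as one option under those assumptions.

```python
import hashlib

# Hypothetical column names; adjust to the actual training table.
PII_DROP = {"name", "email", "phone"}    # not needed for modeling: remove
PII_PSEUDONYMIZE = {"customer_id"}       # needed only as a join key: mask


def pseudonymize(value: str, salt: str) -> str:
    """Replace a value with a salted SHA-256 digest, truncated to 16 hex chars."""
    return hashlib.sha256((salt + value).encode("utf-8")).hexdigest()[:16]


def scrub_record(record: dict, salt: str) -> dict:
    """Return a copy of the record with PII fields dropped or pseudonymized."""
    clean = {}
    for key, value in record.items():
        if key in PII_DROP:
            continue  # remove the field entirely
        if key in PII_PSEUDONYMIZE:
            clean[key] = pseudonymize(str(value), salt)
        else:
            clean[key] = value
    return clean


# Toy record for illustration only.
row = {
    "customer_id": "C-1001",
    "name": "Jane Doe",
    "email": "jane@example.com",
    "debt_to_income": 0.31,
    "defaulted": 0,
}
scrubbed = scrub_record(row, salt="replace-with-a-secret")
print(scrubbed)
```

Because the same input and salt always produce the same digest, the masked `customer_id` can still be used to join tables without exposing the original identifier.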

Scientific integrity and TEVV considerations are identified and documented, including those related to experimental design, data collection and selection (e.g., availability, representativeness, suitability), system trustworthiness and construct validation. – Map 2.3

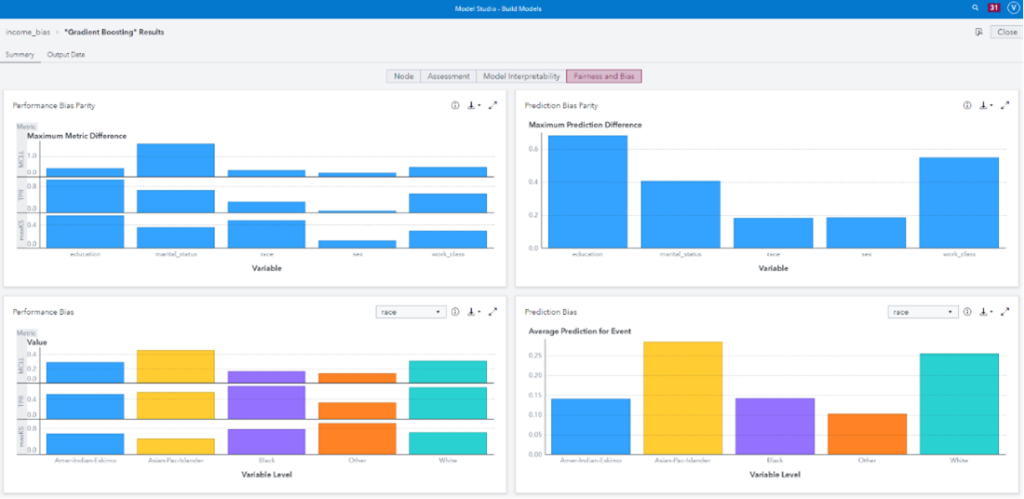

Organizations should review how well the training data represents the target population. Using training data that is not representative of the target population can create a model that is less accurate for specific groups, resulting in undue harm to those groups. It can also create a model that is less effective overall. Additionally, the intended or unintended inclusion of protected class variables in the training data can create a model that treats groups differently. Even when protected class variables are removed from the training data, organizations must also check the data for proxy variables. Proxy variables are variables that are highly correlated with, and predictive of, protected class variables. For example, a well-documented proxy variable is the number of past pregnancies, which is highly correlated with an individual’s sex.

For some use cases, these protected class variables may be important predictors and should remain in the training data. This is most common in health care. Use cases for predicting health risk may include race and gender predictors because symptoms may manifest differently across groups. For example, the differences in heart attack symptoms in men and women are well documented. For other use cases, such as models that predict who should receive a service or a benefit, including these variables can lead to discrimination.
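One simple way to screen for proxy variables is to measure how strongly each candidate feature correlates with a protected attribute. The sketch below uses a Pearson correlation and a threshold of 0.7. Both the threshold and the toy data are assumptions chosen for illustration, and correlation is only a first-pass screen, since it will miss non-linear or multi-variable proxies.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length numeric lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # a constant column carries no linear signal
    return cov / sqrt(var_x * var_y)


def flag_proxies(features, protected, threshold=0.7):
    """Return features whose |correlation| with the protected attribute meets the threshold."""
    flagged = {}
    for name, values in features.items():
        r = pearson(values, protected)
        if abs(r) >= threshold:
            flagged[name] = r
    return flagged


# Toy data for illustration only: 1 = female, 0 = male.
sex = [1, 1, 1, 1, 0, 0, 0, 0]
features = {
    "past_pregnancies": [2, 1, 1, 3, 0, 0, 0, 0],
    "debt_to_income": [0.2, 0.5, 0.3, 0.4, 0.3, 0.4, 0.2, 0.5],
}
flagged = flag_proxies(features, sex)
print(flagged)
```

A flagged feature is a prompt for review rather than an automatic removal: as noted above, some use cases legitimately need these predictors.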

Evaluations involving human subjects meet applicable requirements (including human subject protection) and are representative of the relevant population. – Measure 2.2

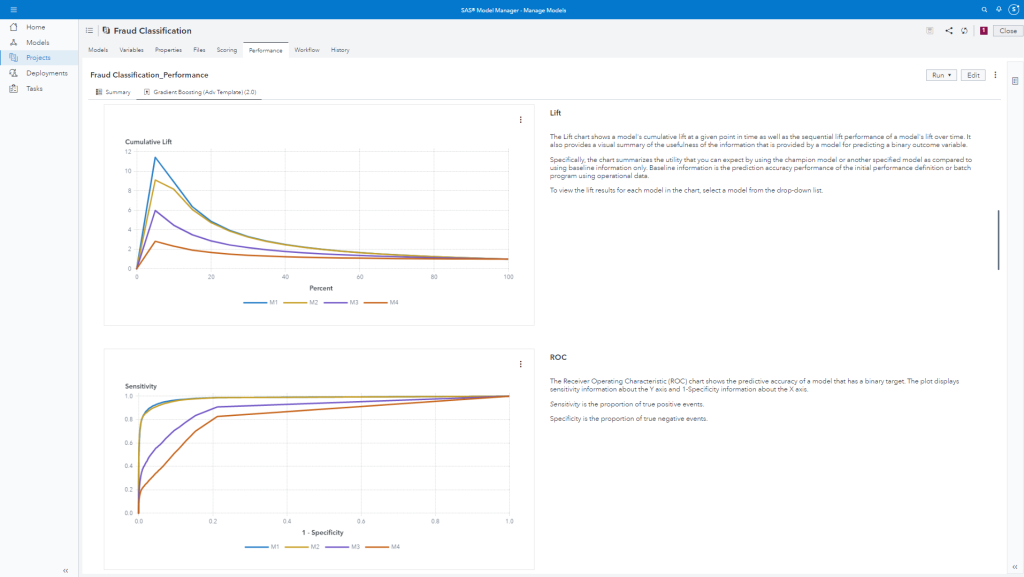

Model performance provides information on how accurately the model can predict the desired event. This helps us understand how useful the model may be for our AI system. Beyond performance at the global level, data scientists need to understand how well the model performs across various groups and classes. A model that performs worse on specific groups may point to a lack of representation within the training data. Additionally, a model that is less accurate on specific groups may cause harm to those groups when wrong decisions are made using that model.
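To make the difference between global and group-level performance concrete, here is a minimal sketch that computes accuracy overall and per group. The group labels and predictions are toy values, and accuracy is just one possible metric; the same pattern applies to recall, false positive rate or any other measure.

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Return a dict mapping each group label to the model's accuracy on that group."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {group: correct[group] / total[group] for group in total}


# Toy labels and predictions for illustration only.
y_true = [1, 0, 1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 1, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

overall = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
per_group = accuracy_by_group(y_true, y_pred, groups)
print(f"overall: {overall:.2f}", per_group)
```

In this toy example the overall accuracy looks acceptable while group B fares much worse than group A, which is exactly the kind of gap a global metric hides.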

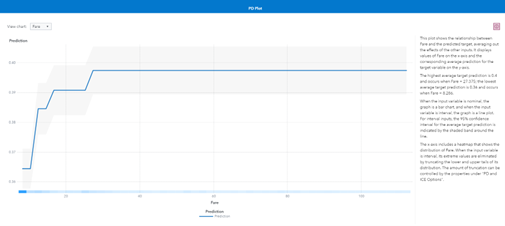

Finally, model explainability helps stakeholders understand how the inputs to a model affect the model’s prediction. By understanding this relationship, data scientists and domain experts can determine if the model captures well-known connections between factors. For example, as the debt-to-income ratio increases, an individual’s probability of default should also increase. Additionally, explainability can provide evidence that a model is fair by demonstrating that the model is using factors that are unrelated to protected group status.
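The debt-to-income example can be checked with a simple sweep: vary one input over a grid while holding the others fixed, and confirm the predictions move in the expected direction. In the sketch below, `predict_default` is a hypothetical stand-in for a trained model, with made-up coefficients, and the single fixed profile is an assumption. Fuller explainability techniques such as partial dependence plots average over many profiles.

```python
from math import exp


def predict_default(debt_to_income, credit_utilization):
    """Hypothetical stand-in for a trained model: a logistic score with made-up weights."""
    z = -3.0 + 4.0 * debt_to_income + 1.5 * credit_utilization
    return 1.0 / (1.0 + exp(-z))


def sweep(predict, feature, grid, fixed):
    """Predictions as `feature` varies over `grid` with the other inputs held at `fixed`."""
    return [predict(**{feature: value}, **fixed) for value in grid]


def is_non_decreasing(values):
    """True if each value is at least as large as the one before it."""
    return all(later >= earlier for earlier, later in zip(values, values[1:]))


grid = [i / 10 for i in range(10)]  # debt-to-income from 0.0 to 0.9
curve = sweep(predict_default, "debt_to_income", grid, {"credit_utilization": 0.4})
print("monotone in debt-to-income:", is_non_decreasing(curve))
```

If the check fails for a relationship that domain experts expect to hold, that is a signal to investigate the training data or the model before deployment.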

The functionality and behavior of the AI system and its components – as identified in the MAP function – are monitored when in production. – Measure 2.4

All models decay, but models do not decay at the same rate. Model decay leads to a decrease in model accuracy, so any decision made using that model will be wrong more often. After a model is deployed into production, it should be monitored so organizations can understand when it is time to take action to maintain their analytical models, whether that means retraining the model, selecting a new champion model, ending the workflow, or retiring the project.
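A minimal monitoring rule might compare recent accuracy against the accuracy measured at deployment and raise a flag when the gap grows too large. The sketch below assumes that ground-truth labels eventually arrive for scored records. The 0.05 tolerance and three-batch window are illustrative choices, not recommendations from the framework.

```python
def batch_accuracy(y_true, y_pred):
    """Fraction of predictions in a batch that match the observed outcome."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def needs_attention(baseline_accuracy, recent_accuracies, tolerance=0.05, window=3):
    """Flag when mean accuracy over the last `window` batches drops more than `tolerance` below baseline."""
    recent = recent_accuracies[-window:]
    if not recent:
        return False  # nothing observed yet
    return baseline_accuracy - sum(recent) / len(recent) > tolerance


# Toy monthly accuracy values for illustration only.
monthly_accuracy = [0.90, 0.89, 0.88, 0.85, 0.82, 0.80]
alert = needs_attention(0.90, monthly_accuracy)
print("review model:", alert)
```

When the flag is raised, the follow-up is a judgment call among the options above: retrain, promote a new champion, or retire the model.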

Post-deployment AI system monitoring plans are implemented, including mechanisms for capturing and evaluating input from users and other relevant AI actors, appeal and override, decommissioning, incident response, recovery, and change management. Measurable activities for continual improvements are integrated into AI system updates and include regular engagement with interested parties, including relevant AI actors. – Manage 4.1 and 4.2

The NIST AI Risk Management Framework recommends reviewing the project on a recurring basis to validate that the project parameters are still valid. This provides a regular audit of AI systems, allowing organizations to remove systems no longer in use. The benefit to the organization cannot be overstated. Removing systems no longer in use minimizes the risk of someone leveraging a deprecated model and can even help save on infrastructure costs.

Adopting these recommendations may seem daunting, but SAS® Viya® makes it easy by providing the tooling required to map these recommendations to steps within a workflow. To take it a step further, SAS provides a Trustworthy AI Lifecycle Workflow with these steps predefined. The goal of the workflow is to make NIST’s recommendations easy for organizations to adopt.

Sophia Rowland, Kristi Boyd, and Vrushali Sawant contributed to this article.
