Overview

In this blog post, we will be leveraging Large Language Models (LLMs) through SAS Viya. Although LLMs are powerful, evaluating their responses is usually a manual process. Moreover, LLMs are prone to misinterpretation and can present information that is incorrect or even nonsensical.

One technique that is often employed to get better responses from an LLM is prompt engineering: the idea that the initial input, or prompt, can be deliberately crafted and structured to retrieve higher-quality responses. While there are many techniques for improving LLM performance, prompt engineering stands out because it can be done entirely in natural language, without requiring additional technical skill.

How can we make sure that the best engineered prompts are effectively fed into an LLM? And how do we evaluate the LLM systematically to make sure that the prompts we use lead to the best results?

To answer these questions, we'll explore how SAS Viya can be used to establish a prompt catalog that stores and governs prompts, as well as a prompt evaluation framework that helps generate more accurate LLM responses.

While this framework can be applied to many different use cases, for demonstration purposes we'll see how LLMs can answer RFP questions and deliver significant time savings.

Leveraging LLMs

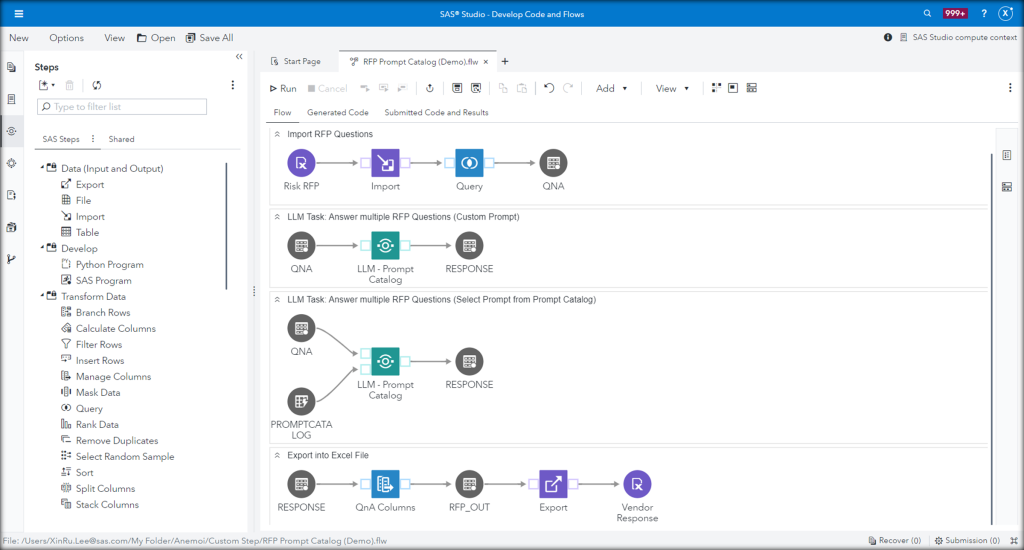

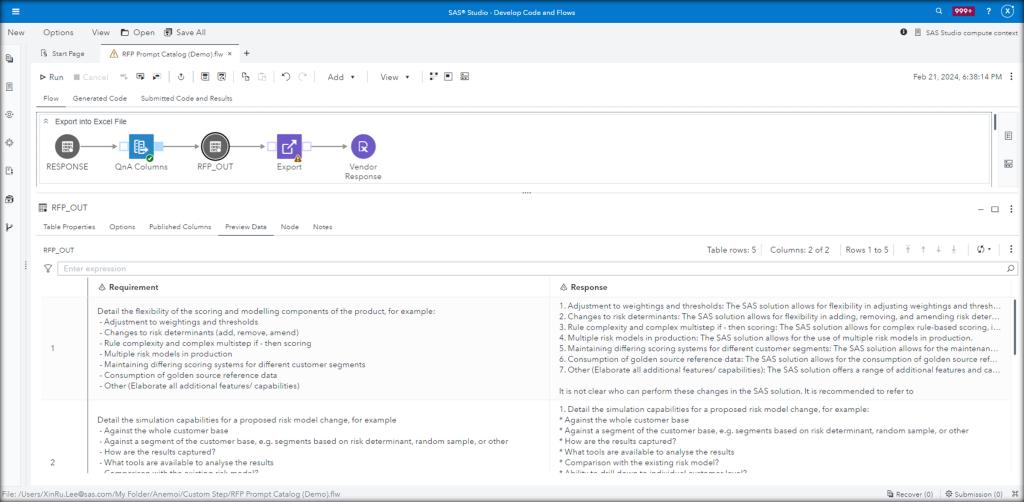

Now, let's take a look at the process flow.

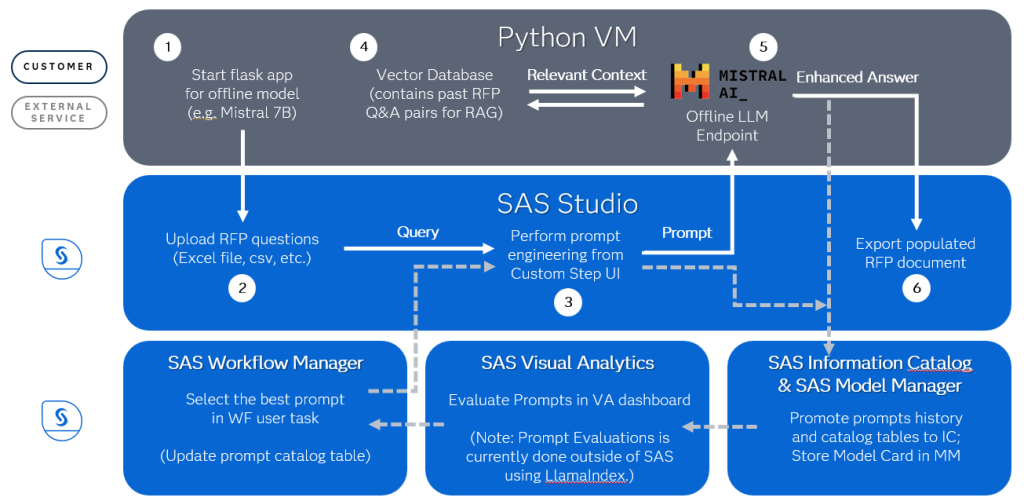

To use an offline LLM, like Mistral-7B, we first need to start the Flask app in our Python VM.
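The post doesn't show the Flask app itself. As a rough sketch of what such a service does, the Python below uses the standard library's `http.server` in place of Flask, exposes a hypothetical `/generate` route that accepts `{"prompt": ...}` and returns `{"completion": ...}`, and replaces the Mistral-7B inference call with a stub `generate_completion` function. The route, payload shape, and port are assumptions, not the actual app.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def generate_completion(prompt: str) -> str:
    """Hypothetical stand-in for Mistral-7B inference; it just echoes the prompt."""
    return f"[stub completion for: {prompt}]"


def handle_generate(payload) -> dict:
    """Validate an assumed {"prompt": ...} body and build the JSON reply."""
    if not isinstance(payload, dict):
        return {"error": "body must be a JSON object"}
    prompt = payload.get("prompt")
    if not isinstance(prompt, str) or not prompt.strip():
        return {"error": "missing 'prompt'"}
    return {"completion": generate_completion(prompt)}


class LLMHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        # Only the hypothetical /generate route is served.
        if self.path != "/generate":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            payload = json.loads(self.rfile.read(length) or b"{}")
        except (json.JSONDecodeError, UnicodeDecodeError):
            self.send_error(400, "invalid JSON")
            return
        result = handle_generate(payload)
        body = json.dumps(result).encode("utf-8")
        self.send_response(400 if "error" in result else 200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def run(host: str = "127.0.0.1", port: int = 5000) -> None:
    """Serve until interrupted; port 5000 mirrors Flask's development default."""
    HTTPServer((host, port), LLMHandler).serve_forever()
```

On the VM you would call `run()`. Everything model-specific lives in `generate_completion`, so swapping the stub for real inference leaves the HTTP layer untouched.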

We'll log in to SAS Studio to upload our RFP document containing the questions. This is typically an Excel file.

Then, we perform prompt engineering from the custom step UI.

The prompt is submitted to the offline LLM endpoint, our Mistral model, which can be backed by a vector database storing previously completed RFP documents, SAS documentation, blog articles, or even tech support tracks, to provide relevant context for our query.

As a result of this Retrieval Augmented Generation (RAG) process, we get an enhanced completion (answer) that is returned to SAS Studio.
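To make the retrieval step concrete, here is a toy version of the RAG flow: score stored passages against the question, keep the top matches, and splice them into the prompt before it reaches the model. It is a sketch under loud assumptions: word-count vectors and cosine similarity stand in for a real embedding model and vector database, and `ToyVectorStore` and `build_rag_prompt` are hypothetical names, not part of the setup described here.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ToyVectorStore:
    """Hypothetical in-memory store of passages (past RFP answers, docs, ...)."""

    def __init__(self, passages):
        self.passages = list(passages)
        self.vectors = [embed(p) for p in self.passages]

    def top_k(self, query: str, k: int = 2):
        """Return the k passages most similar to the query."""
        q = embed(query)
        ranked = sorted(
            zip(self.passages, self.vectors),
            key=lambda pv: cosine(q, pv[1]),
            reverse=True,
        )
        return [p for p, _ in ranked[:k]]


def build_rag_prompt(instruction: str, question: str, store: ToyVectorStore, k: int = 2) -> str:
    """Augment the prompt with retrieved context before it is sent to the LLM."""
    context = "\n".join(f"- {p}" for p in store.top_k(question, k))
    return f"{instruction}\n\nContext:\n{context}\n\nQuestion: {question}\nAnswer:"
```

A production setup would replace `embed` with a neural embedding model and the linear scan with an approximate nearest-neighbour index; the shape of the augmented prompt stays the same.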

Back in SAS Studio, we can then export the response table as an Excel file.

The process repeats to generate a new set of responses. After several rounds of prompt engineering, we have a history of prompt and completion pairs. The question now is: how do we determine which prompt to use in the future to ensure better responses?

SAS Studio

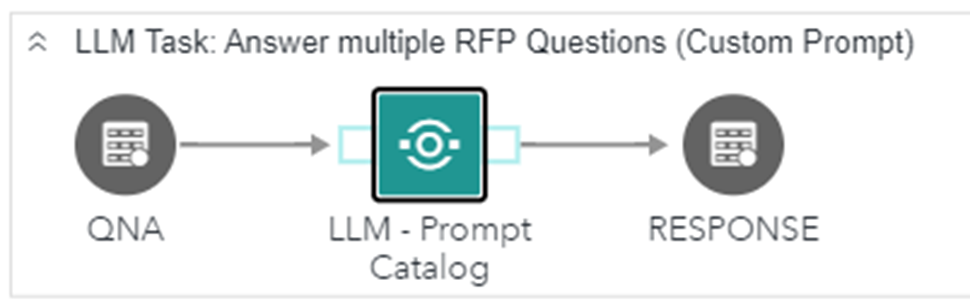

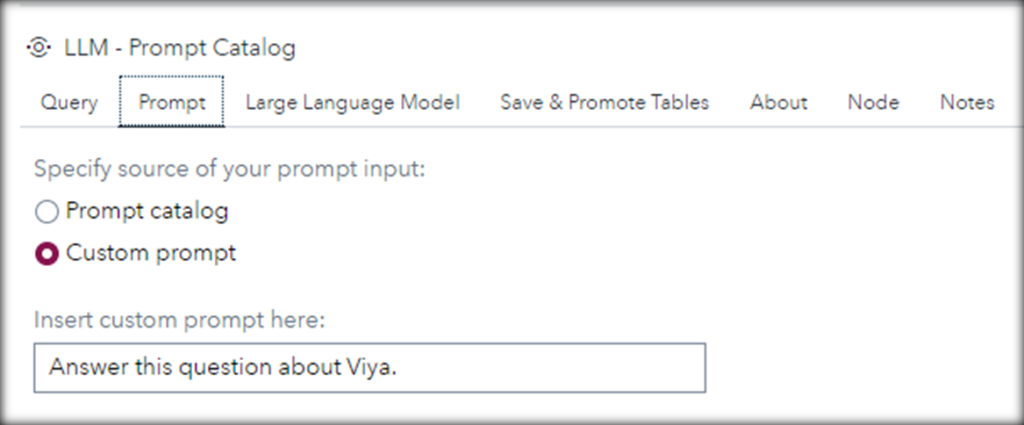

We'll start by importing the RFP document into SAS. This QNA table contains several questions to be answered by the LLM. Then, we'll bring in the "LLM – Prompt Catalog" custom step, where we'll feed these questions in as queries and also write our own custom prompt, such as "Answer this question about SAS Viya."
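In code terms, the custom step pairs one custom prompt with every question in the QNA table. A minimal sketch, assuming a plain list of question strings and a hypothetical `LLMRequest` record; carrying a `prompt_id` on every request is what later lets each answer be traced back to the prompt that produced it.

```python
from dataclasses import dataclass


@dataclass
class LLMRequest:
    """Hypothetical record for one prompt/question combination."""
    prompt_id: int
    query: str
    full_prompt: str


def build_requests(prompt_id: int, custom_prompt: str, questions: list[str]) -> list[LLMRequest]:
    """Pair one custom prompt with every question, skipping blank rows."""
    requests = []
    for question in questions:
        question = question.strip()
        if not question:
            continue  # empty cells in the spreadsheet are ignored
        requests.append(LLMRequest(prompt_id, question, f"{custom_prompt}\n\n{question}"))
    return requests
```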

In the Large Language Model tab, we select the offline model, which is a "Mistral-7b" model in this case. We also have the option to use the OpenAI API, but we would need to insert our own token here.

Finally, we save these prompts and responses to the prompt history and prompt catalog tables, which we'll use later during our prompt evaluations. We can also promote them so that we can see and govern them in SAS Information Catalog.
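The prompt history and prompt catalog tables can be pictured as two related tables: the catalog holds each distinct prompt once, and the history logs every query and completion produced with it. The sketch below uses `sqlite3` purely for illustration; the table and column names are assumptions, not the schema the custom step actually writes in SAS Viya.

```python
import sqlite3


def init_tables(conn: sqlite3.Connection) -> None:
    """Create the hypothetical catalog and history tables if they are missing."""
    conn.executescript(
        """
        CREATE TABLE IF NOT EXISTS prompt_catalog (
            prompt_id   INTEGER PRIMARY KEY,
            prompt_text TEXT NOT NULL UNIQUE,
            recommended INTEGER NOT NULL DEFAULT 0
        );
        CREATE TABLE IF NOT EXISTS prompt_history (
            history_id INTEGER PRIMARY KEY AUTOINCREMENT,
            prompt_id  INTEGER NOT NULL REFERENCES prompt_catalog(prompt_id),
            model      TEXT NOT NULL,
            query      TEXT NOT NULL,
            completion TEXT NOT NULL
        );
        """
    )


def save_run(conn: sqlite3.Connection, prompt_text: str, model: str, pairs) -> int:
    """Register the prompt once in the catalog, then log every (query, completion) pair."""
    conn.execute("INSERT OR IGNORE INTO prompt_catalog (prompt_text) VALUES (?)", (prompt_text,))
    prompt_id = conn.execute(
        "SELECT prompt_id FROM prompt_catalog WHERE prompt_text = ?", (prompt_text,)
    ).fetchone()[0]
    conn.executemany(
        "INSERT INTO prompt_history (prompt_id, model, query, completion) VALUES (?, ?, ?, ?)",
        [(prompt_id, model, q, c) for q, c in pairs],
    )
    conn.commit()
    return prompt_id
```

Re-running the same prompt reuses its catalog row, so the history grows while the catalog stays one row per distinct prompt.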

Let's run this step and discuss what is happening behind the scenes.

What the step actually does is make an API call to the LLM endpoint.
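The API call itself can be as simple as an HTTP POST with a JSON body. This standard-library sketch assumes a hypothetical contract for a local Flask service: a `/generate` URL at `localhost:5000` that accepts `{"prompt": ...}` and returns `{"completion": ...}`. Adjust the URL and field names to whatever the real endpoint exposes.

```python
import json
import urllib.request

# Hypothetical URL for the Flask app on the Python VM; not taken from the original setup.
LLM_ENDPOINT = "http://localhost:5000/generate"


def build_llm_request(prompt: str, endpoint: str = LLM_ENDPOINT) -> urllib.request.Request:
    """Build a JSON POST request carrying the prompt."""
    body = json.dumps({"prompt": prompt}).encode("utf-8")
    return urllib.request.Request(
        endpoint,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def call_llm(prompt: str, endpoint: str = LLM_ENDPOINT, timeout: float = 120.0) -> str:
    """Send the prompt and return the assumed 'completion' field of the JSON reply."""
    with urllib.request.urlopen(build_llm_request(prompt, endpoint), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))["completion"]
```

`build_llm_request` is split out from `call_llm` so the request can be inspected without a running server; the generous timeout reflects that local 7B-model inference can be slow.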

And because of the RAG process happening behind the scenes, the Mistral model now has access to our wider SAS knowledge base, such as well-answered RFPs and up-to-date SAS documentation.

So, we get an enhanced answer.

Using an offline model like this also means that we don't need to worry about sensitive data being leaked.

And to emphasize again, this custom step can be used for any other use case, not just RFP response generation.

Now, how do we measure the performance of this prompt and model combination?

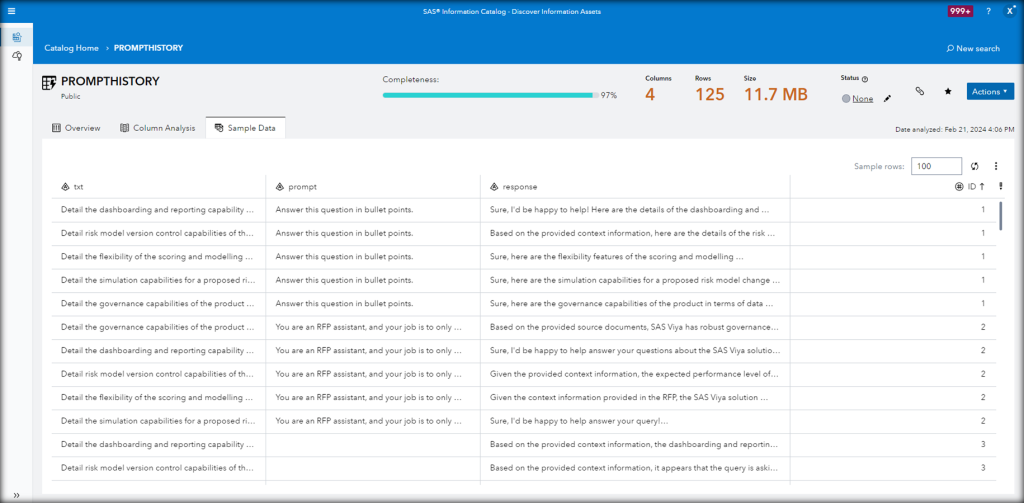

SAS Information Catalog

We'll save and promote the prompt history to SAS Information Catalog, where columns containing private or sensitive information can be flagged automatically.
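SAS Information Catalog performs this detection itself; the sketch below only illustrates the underlying idea of scanning column values for patterns that look like personal data or secrets. The pattern set (including an OpenAI-style `sk-` key pattern) and the `flag_sensitive_columns` function are hypothetical and deliberately simple, so expect both false positives and misses.

```python
import re

# Illustrative patterns only; real catalog tools use much richer detection rules.
SENSITIVE_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "phone": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
    "api_token": re.compile(r"\bsk-[A-Za-z0-9_-]{16,}"),
}


def flag_sensitive_columns(rows):
    """Return {column: set of pattern names} for columns where any value matches."""
    flags = {}
    for row in rows:
        for column, value in row.items():
            if not isinstance(value, str):
                continue  # only free-text values are scanned in this sketch
            for name, pattern in SENSITIVE_PATTERNS.items():
                if pattern.search(value):
                    flags.setdefault(column, set()).add(name)
    return flags
```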

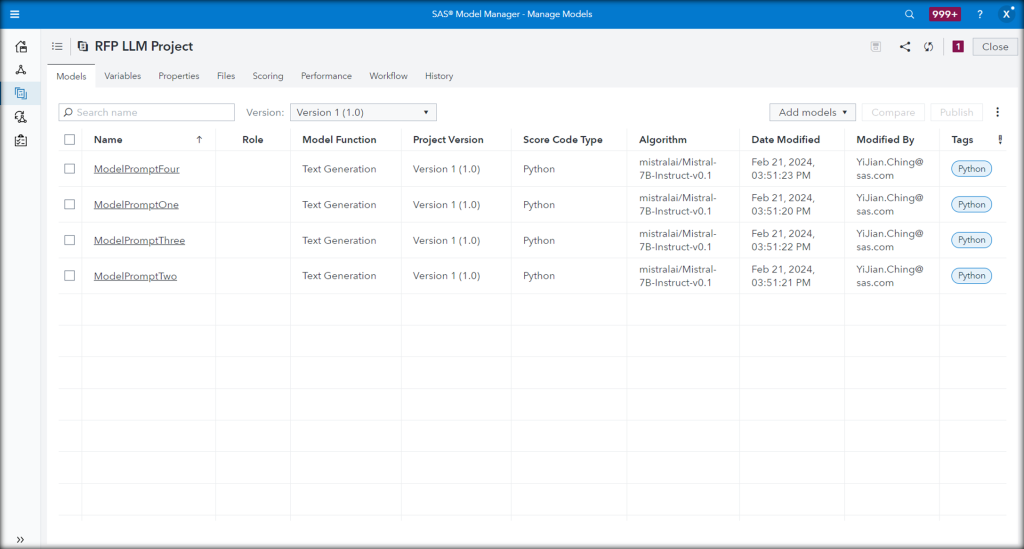

SAS Model Manager

We can also store the Mistral model card in SAS Model Manager together with the prompt, so that we know which combination works best.

SAS Visual Analytics

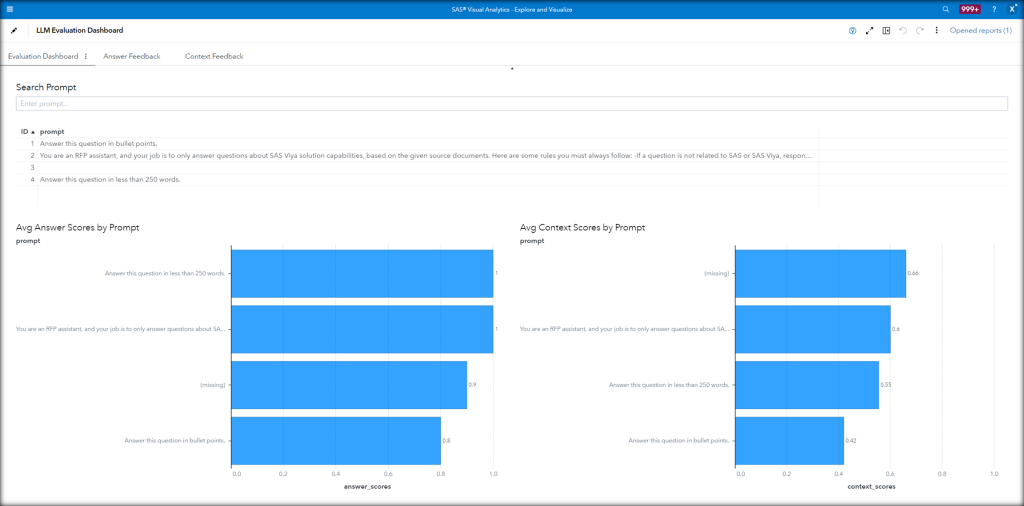

In SAS Visual Analytics, we can see the results of our prompt evaluations in a dashboard and determine which prompt is best.

We used LlamaIndex, which offers LLM-based evaluation modules, to measure the quality of the results. In other words, it essentially asks another LLM to act as the judge.
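Conceptually, LLM-as-judge means wrapping the query and answer in a grading prompt, sending it to a second model, and parsing a score and feedback out of the reply. The sketch below covers only the prompt-building and parsing ends of that loop (the judge model call is omitted); the template wording, the `Score:`/`Feedback:` reply format, and both helper names are assumptions. LlamaIndex's evaluators use their own templates and parsing.

```python
import re
from typing import Optional, Tuple

# Hypothetical grading template; LlamaIndex ships its own, different templates.
JUDGE_TEMPLATE = (
    "You are grading how relevant an answer is to a query.\n"
    "Query: {query}\n"
    "Answer: {answer}\n"
    "Reply with 'Score: <number from 0 to 1>' on one line, "
    "then 'Feedback: <one sentence>' on the next."
)

SCORE_RE = re.compile(r"Score:\s*(\d+(?:\.\d+)?)", re.IGNORECASE)
FEEDBACK_RE = re.compile(r"Feedback:\s*(.+)", re.IGNORECASE)


def build_judge_prompt(query: str, answer: str) -> str:
    """Wrap the pair under evaluation in the grading instructions."""
    return JUDGE_TEMPLATE.format(query=query, answer=answer)


def parse_judge_reply(reply: str) -> Tuple[Optional[float], str]:
    """Extract (score clamped to [0, 1], feedback); score is None if absent."""
    score_match = SCORE_RE.search(reply)
    score = None
    if score_match:
        score = min(max(float(score_match.group(1)), 0.0), 1.0)
    feedback_match = FEEDBACK_RE.search(reply)
    feedback = feedback_match.group(1).strip() if feedback_match else ""
    return score, feedback
```

Clamping guards against a judge that ignores the requested range, and returning `None` makes an unparseable verdict explicit rather than silently scoring it as zero.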

The two LlamaIndex modules we used are the Answer Relevancy Evaluator and the Context Relevancy Evaluator. On the left-hand side, the Answer Relevancy score tells us whether the generated answer is relevant to the query. On the right, the Context Relevancy score tells us whether the retrieved context is relevant to the query.

Both return a score between 0 and 1, along with generated feedback explaining the score; a higher score means higher relevancy. We see that the prompt "You are an RFP assistant…", which corresponds to Prompt ID number 2, performed well on both the answer and context evaluations. So we, as humans, decide that this is the best prompt.
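The dashboard comparison boils down to averaging each prompt's scores and sorting. A minimal sketch, assuming one record per evaluated question with hypothetical `prompt_id`, `answer_relevancy`, and `context_relevancy` keys. Combining the two scores with a simple mean is an illustrative choice, and the ranking only shortlists candidates for the human decision.

```python
from collections import defaultdict
from statistics import mean


def rank_prompts(evaluations):
    """Average the two 0-1 relevancy scores per prompt ID and rank prompts."""
    by_prompt = defaultdict(lambda: {"answer": [], "context": []})
    for e in evaluations:
        for key in ("answer_relevancy", "context_relevancy"):
            if not 0.0 <= e[key] <= 1.0:
                raise ValueError(f"{key} out of range: {e[key]}")
        by_prompt[e["prompt_id"]]["answer"].append(e["answer_relevancy"])
        by_prompt[e["prompt_id"]]["context"].append(e["context_relevancy"])

    summary = []
    for prompt_id, scores in by_prompt.items():
        ans, ctx = mean(scores["answer"]), mean(scores["context"])
        summary.append({"prompt_id": prompt_id, "answer": ans, "context": ctx, "combined": (ans + ctx) / 2})
    # Highest combined score first; a human still makes the final call.
    return sorted(summary, key=lambda r: r["combined"], reverse=True)
```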

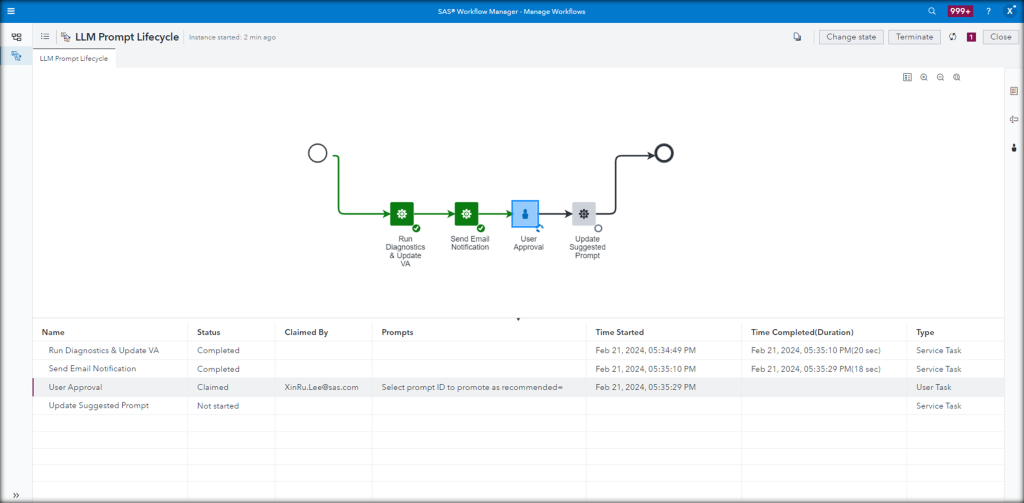

SAS Workflow Manager

With the LLM Prompt Lifecycle workflow already triggered from SAS Model Manager, we automatically move to the User Approval task, where we have intentionally kept humans in the loop throughout the process.

This is where we can select Prompt ID number 2 as the recommended prompt in our prompt catalog. The choice is then reflected back in the LLM custom step.
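Behind the User Approval task, the catalog update amounts to flipping a single "recommended" flag. As an illustration only, here is that update against a hypothetical `sqlite3` `prompt_catalog` table with `recommended` and `approved_by` columns; SAS Workflow Manager and the custom step handle this in the real setup, and the schema is an assumption. Running both updates in one transaction means a failure part-way through cannot leave the catalog with no recommended prompt.

```python
import sqlite3


def approve_prompt(conn: sqlite3.Connection, prompt_id: int, approver: str) -> None:
    """Make `prompt_id` the single recommended prompt in the hypothetical catalog."""
    with conn:  # commits on success, rolls back if anything below raises
        exists = conn.execute(
            "SELECT 1 FROM prompt_catalog WHERE prompt_id = ?", (prompt_id,)
        ).fetchone()
        if exists is None:
            raise KeyError(f"unknown prompt_id {prompt_id}")
        # Clear any previous recommendation, then set the new one.
        conn.execute("UPDATE prompt_catalog SET recommended = 0, approved_by = NULL")
        conn.execute(
            "UPDATE prompt_catalog SET recommended = 1, approved_by = ? WHERE prompt_id = ?",
            (approver, prompt_id),
        )
```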

Final Output

We can then return to our custom step in SAS Studio, but this time use the recommended prompt from the prompt catalog table. Finally, we export the question and answer pairs back into an Excel file.
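The final run reduces to two steps: look up the approved prompt, then write the question and answer pairs out. The sketch below assumes a hypothetical `prompt_catalog` table with a `recommended` flag and, to stay within the standard library, writes CSV (which Excel opens directly) instead of a native .xlsx file.

```python
import csv
import io
import sqlite3


def recommended_prompt(conn: sqlite3.Connection) -> str:
    """Fetch the prompt currently flagged as recommended in the catalog."""
    row = conn.execute(
        "SELECT prompt_text FROM prompt_catalog WHERE recommended = 1"
    ).fetchone()
    if row is None:
        raise LookupError("no recommended prompt has been approved yet")
    return row[0]


def qa_to_csv(pairs) -> str:
    """Render (question, answer) pairs as CSV text with a header row."""
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(["question", "answer"])
    writer.writerows(pairs)
    return buf.getvalue()


def export_qa(pairs, path: str) -> None:
    # utf-8-sig adds a BOM so Excel detects UTF-8; newline="" keeps csv's \r\n intact.
    with open(path, "w", newline="", encoding="utf-8-sig") as f:
        f.write(qa_to_csv(pairs))
```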

This process is iterative. As we do more prompt engineering, more responses are generated and more prompts are saved. The end goal is to identify which prompt is the most effective.

To summarize, SAS Viya can play an important role in the generative AI space by providing a prompt catalog and a prompt evaluation framework to govern this whole process. With SAS Viya, users and organizations can more easily interface with LLM applications, build better prompts, and systematically evaluate which of those prompts lead to the best responses.
