
Introduction

In natural language processing (NLP), you need to understand and effectively process sequential data. Long Short-Term Memory (LSTM) models have emerged as a powerful tool for tackling this challenge. They can capture both short-term nuances and long-term dependencies within sequences. Before delving into the intricacies of LSTM language translation models, it is crucial to grasp the fundamental concept of LSTMs and their role within Recurrent Neural Networks (RNNs). This article provides a comprehensive guide to understanding, implementing, and evaluating LSTM models for language translation tasks, with a focus on translating English sentences into Hindi. Through a step-by-step approach, we will explore the architecture, preprocessing techniques, model building, training, and evaluation of LSTM models.

Learning Objectives

Understand the fundamentals of LSTM architecture.

Learn how to preprocess sequential data for LSTM models.

Implement LSTM models for sequence prediction tasks.

Evaluate and interpret LSTM model performance.

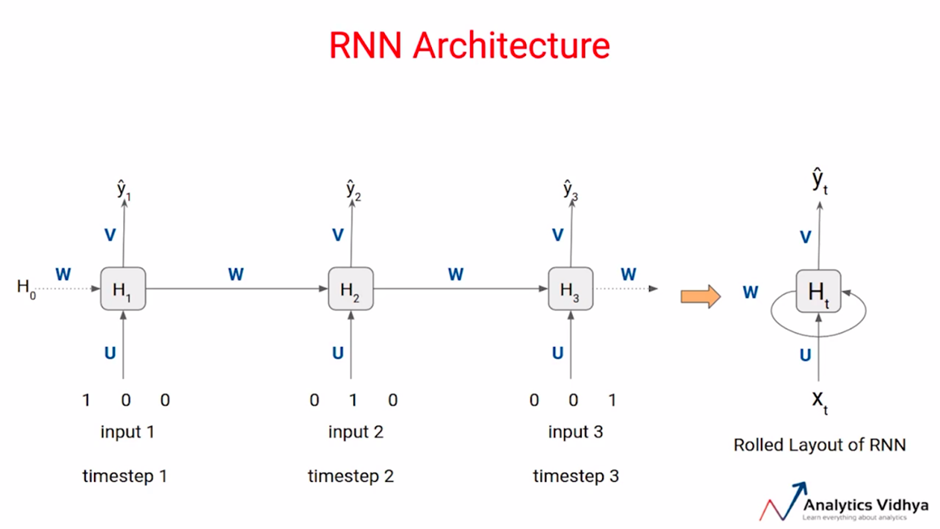

What Is an RNN?

Recurrent Neural Networks (RNNs) play a crucial role among neural networks because of their distinctive ability to handle sequential data effectively. Unlike other types of neural networks, RNNs are specifically designed to capture dependencies between sequential data points.

Consider the example of text data, where each data point X_i represents a sequence of words or sentences. In natural language, the order of words matters considerably, as do the semantic relationships between them. However, conventional feed-forward neural networks often overlook this aspect, treating the input as an unordered set of features. Consequently, they struggle to grasp the inherent structure and meaning of the text.

RNNs address this limitation by maintaining relationships between words across the entire sequence. They achieve this by introducing a time axis, essentially creating a looped structure in which each word of the input sequence is processed in order: at every step, a hidden state is updated from both the current word and the context carried over from the previous words.
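The looped structure amounts to reusing the same weights at every time step. Below is a minimal NumPy sketch of a vanilla (Elman) RNN forward pass, assuming the common update h_t = tanh(W_x x_t + W_h h_t-1 + b) and random toy weights; the function and variable names are illustrative, not taken from any library.

```python
import numpy as np

def rnn_forward(xs, W_x, W_h, b):
    """Run a vanilla RNN over xs of shape (T, input_dim); return all hidden states."""
    h = np.zeros(W_h.shape[0])  # initial hidden state h_0 = 0
    states = []
    for x_t in xs:
        # Same weights at every step; h carries context from earlier words forward.
        h = np.tanh(W_x @ x_t + W_h @ h + b)
        states.append(h)
    return np.stack(states)  # shape (T, hidden_dim)

rng = np.random.default_rng(0)
T, input_dim, hidden_dim = 6, 3, 5
xs = rng.normal(size=(T, input_dim))  # e.g. six embedded words (toy values)
W_x = rng.normal(scale=0.5, size=(hidden_dim, input_dim))
W_h = rng.normal(scale=0.5, size=(hidden_dim, hidden_dim))
b = np.zeros(hidden_dim)

H = rnn_forward(xs, W_x, W_h, b)
print(H.shape)  # (6, 5)
```

Because h is threaded through the loop, feeding the same word vectors in reverse order yields a different final state, which is exactly the order sensitivity that an unordered bag of features lacks.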

This structure allows RNNs to capture short-term dependencies in the data. However, they still struggle to preserve long-term dependencies. Along the time axis, RNNs have difficulty maintaining strong connections between the first and last words of a sequence. This is primarily because earlier inputs tend to have less influence on later predictions: during training, the gradient that flows back to an early time step is a product of many per-step factors, and when those factors are smaller than one it shrinks exponentially (the vanishing gradient problem). The result is a potential loss of context and meaning over longer sequences.
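To make this fading influence concrete, here is a minimal sketch assuming a toy scalar RNN h_t = tanh(w·h_t-1 + u·x_t) with made-up weights w = 0.9 and u = 0.5. By the chain rule, dh_T/dh_0 is a product of T factors w·(1 − h_t²); with |w| < 1 every factor is below 1 in magnitude, so the gradient decays roughly exponentially with the sequence length (with large |w| the product can instead explode).

```python
import math

def gradient_through_time(w, u, xs, h0=0.0):
    """Return dh_T/dh_0 for the scalar RNN h_t = tanh(w * h_{t-1} + u * x_t)."""
    h, grad = h0, 1.0
    for x in xs:
        h = math.tanh(w * h + u * x)
        # Chain rule: dh_t/dh_{t-1} = w * tanh'(.) = w * (1 - h_t**2)
        grad *= w * (1.0 - h * h)
    return grad

xs = [1.0] * 50  # a constant toy input sequence
for T in (1, 10, 50):
    print(T, gradient_through_time(w=0.9, u=0.5, xs=xs[:T]))
```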

What Is an LSTM?

Before delving into LSTM language translation models, it is essential to grasp the concept of LSTMs.

LSTM stands for Long Short-Term Memory, a specialized type of RNN. As the name suggests, LSTMs are designed to capture both long-term and short-term dependencies within sequential data. If you're interested in learning more about RNNs and LSTMs, you can explore the resources available here and here. But let me give you a concise overview.

LSTMs gained popularity for their ability to overcome the limitations of traditional RNNs, particularly in maintaining both long-term and short-term dependencies within sequential data. This is made possible by the distinctive structure of LSTMs: a separate cell state that is updated additively and regulated by gates.

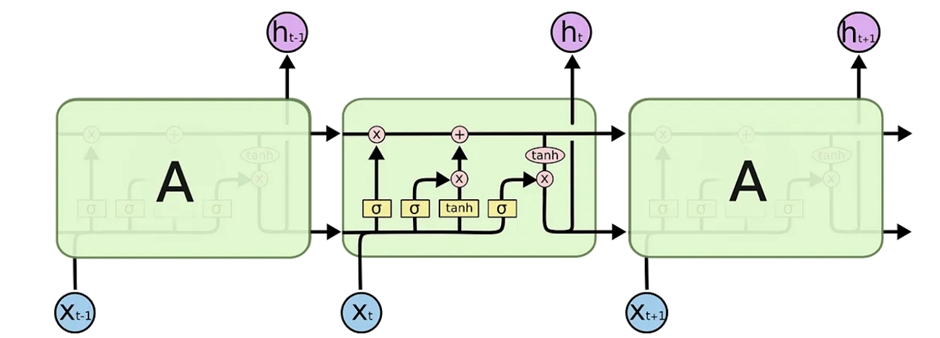

The LSTM structure may initially appear intricate, but I'll simplify it for better understanding. The time axis of a data point, labeled x_t0 to x_tn, corresponds to individual blocks, each an LSTM cell that outputs the hidden state h_t for its time step. The yellow square boxes represent activation functions (the sigmoid and tanh layers), while the round pink boxes represent pointwise operations. Let's delve into the core concept.

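The fundamental idea behind LSTMs is to manage long-term and short-term dependencies effectively. This is accomplished by selectively discarding unimportant information while retaining essential information, which is carried forward along the cell state largely unchanged (close to an identity mapping). The mechanism can be distilled into three primary gates (forget, input, and output) that regulate the cell state, each serving a distinct purpose.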
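For reference, the components described next can be written in the standard LSTM formulation (without peephole connections). Here [h_{t-1}, x_t] denotes concatenation of the previous hidden state and the current input, σ is the sigmoid, and ⊙ is pointwise multiplication; the weight and bias names W_f, W_i, W_c, W_o, b_f, b_i, b_c, b_o are notation introduced here rather than labels from the diagram.

$$
\begin{aligned}
f_t &= \sigma\left(W_f\,[h_{t-1}, x_t] + b_f\right) && \text{forget gate} \\
i_t &= \sigma\left(W_i\,[h_{t-1}, x_t] + b_i\right) && \text{input gate} \\
\tilde{c}_t &= \tanh\left(W_c\,[h_{t-1}, x_t] + b_c\right) && \text{candidate values} \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t && \text{cell state update} \\
o_t &= \sigma\left(W_o\,[h_{t-1}, x_t] + b_o\right) && \text{output gate} \\
h_t &= o_t \odot \tanh(c_t) && \text{hidden state}
\end{aligned}
$$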

1. Forget Gate

The Forget Gate determines which information from the previous cell state should be retained or discarded. It combines the previous hidden state h_t-1 and the current input x_t and passes them through a sigmoid function, producing values between 0 and 1. Values closer to 0 mark information to forget, while values closer to 1 mark information to keep; the gate learns which is which because its weights are adjusted through backpropagation during training.

2. Enter Gate

The Input Gate manages updates to the cell state. It combines the previous hidden state h_t-1 and the current input x_t and passes them through a sigmoid function, producing values between 0 and 1 that indicate importance. In parallel, the same inputs pass through a tanh function, which squashes values into the range -1 to 1 to produce candidate values and keep the network's activations regulated. The two outputs are multiplied pointwise, and the product determines the relevant information to be added to the cell state.

3. Cell State

The Cell State combines the information retained by the Forget Gate (the important information from the previous state) with the information selected by the Input Gate (the important information from the current step). The previous cell state c_t-1 is first multiplied pointwise by the forget gate's output, and the input gate's contribution is then added pointwise. This update yields a new cell state c_t that holds what the network deems relevant.

4. Output Gate

Finally, the Output Gate determines the information passed on to the next hidden state. It feeds the previous hidden state and the current input through a sigmoid function to decide which information to keep. At the same time, the updated cell state is passed through a tanh function. The two outputs are then multiplied to produce the information carried forward as the next hidden state.

It's important to note that the hidden state retains information from previous inputs, which makes it useful for predictions, and it is passed on as the output h_t for the current step.
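Putting these parts together, one LSTM time step can be sketched in NumPy as below. This is a minimal illustration with random toy weights; stacking the four weight matrices into a single W in the order forget, input, candidate, output is an assumption made here for compactness and is not necessarily the layout Keras uses internally.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, W, b):
    """One LSTM time step.

    W: (4 * hidden, hidden + input_dim), rows stacked as forget, input,
    candidate, output (an illustrative layout); b: (4 * hidden,).
    """
    hidden = h_prev.shape[0]
    z = W @ np.concatenate([h_prev, x_t]) + b        # uses [h_{t-1}, x_t]
    f = sigmoid(z[0 * hidden:1 * hidden])            # forget gate
    i = sigmoid(z[1 * hidden:2 * hidden])            # input gate
    c_hat = np.tanh(z[2 * hidden:3 * hidden])        # candidate values
    o = sigmoid(z[3 * hidden:4 * hidden])            # output gate
    c_t = f * c_prev + i * c_hat                     # new cell state
    h_t = o * np.tanh(c_t)                           # new hidden state
    return h_t, c_t

rng = np.random.default_rng(0)
input_dim, hidden = 3, 4
W = rng.normal(scale=0.1, size=(4 * hidden, hidden + input_dim))
b = np.zeros(4 * hidden)
h, c = np.zeros(hidden), np.zeros(hidden)
for x_t in rng.normal(size=(5, input_dim)):          # a toy 5-step sequence
    h, c = lstm_step(x_t, h, c, W, b)
print(h.shape, c.shape)  # (4,) (4,)
```

With all weights and biases at zero, every gate outputs 0.5 and the candidate is 0, so the cell state is simply halved each step; this makes it easy to see how the forget gate scales the old memory.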

Problem Statement

Our aim is to use an LSTM sequence-to-sequence model to translate English sentences into their corresponding Hindi counterparts.

For this, I'm using the Aarif1430/english-to-hindi dataset from Hugging Face.

Step 1: Loading the Data from Hugging Face

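```python
# Notebook (Jupyter/Colab) syntax; from a terminal, run "pip install datasets" instead
!pip install datasets

from datasets import load_dataset
```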

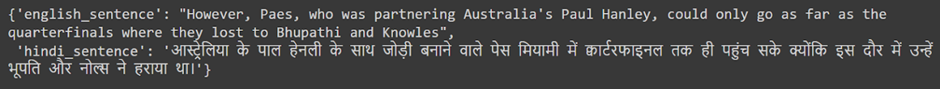

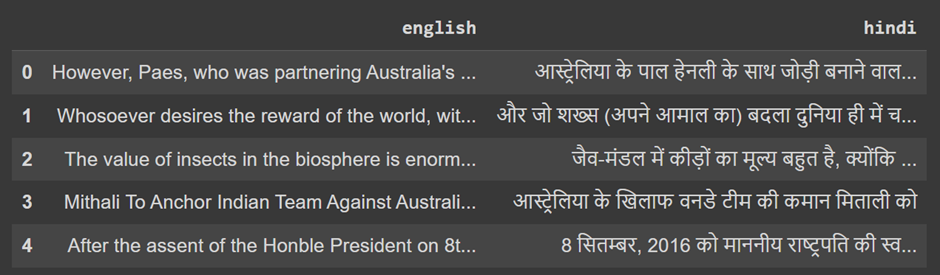

```python
# Download the English-Hindi dataset and inspect the first training example
df = load_dataset("Aarif1430/english-to-hindi")
df['train'][0]
```

```python
import pandas as pd

da = df['train'].to_pandas()  # Load the train split as a pandas DataFrame

da.rename(columns={'english_sentence': 'english', 'hindi_sentence': 'hindi'}, inplace=True)

da.head()
```

In this code, we install the datasets library if it isn't already installed. Then, we use the load_dataset function to load the English-Hindi dataset from Hugging Face. We convert the train split into a pandas DataFrame for further processing and display the first few rows to verify that the data loaded correctly.

Step 2: Importing Necessary Libraries

```python
import string
import warnings

import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
import tensorflow as tf
from numpy import array, argmax, random, take
from tensorflow.keras import optimizers
from tensorflow.keras.callbacks import ModelCheckpoint
from tensorflow.keras.layers import Dense, Embedding, LSTM, RepeatVector
from tensorflow.keras.models import Sequential, load_model
from tensorflow.keras.preprocessing.sequence import pad_sequences
from tensorflow.keras.preprocessing.text import Tokenizer

# Silence library warnings to keep the notebook output readable
warnings.filterwarnings("ignore")
```

Here, we import all the libraries and modules required for data preprocessing, model building, and evaluation.

Step 3: Data Preprocessing

```python
import re
import string

# Remove punctuation and convert text to lowercase for both languages.
# regex=True is needed: since pandas 2.0, str.replace matches literally by default.
punct_pattern = f"[{re.escape(string.punctuation)}]"
da['english'] = da['english'].str.replace(punct_pattern, '', regex=True).str.lower()
da['hindi'] = da['hindi'].str.replace(punct_pattern, '', regex=True).str.lower()

# Find indices of empty rows in both languages
eng_empty_indices = da[da['english'].str.strip() == ''].index
hin_empty_indices = da[da['hindi'].str.strip() == ''].index

# Combine indices from both languages to remove empty rows
remove_indices = list(set(eng_empty_indices) | set(hin_empty_indices))

# Remove empty rows
da.drop(remove_indices, inplace=True)

# Reset indices
da.reset_index(drop=True, inplace=True)
```

Here, we preprocess the data by removing punctuation and converting text to lowercase for both English and Hindi sentences. We also find rows where either sentence is empty and remove them from the dataset.
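To see what this cleaning does to individual rows, here is a stdlib-only sketch of the same logic applied to a few made-up sentence pairs (the samples are hypothetical, not from the dataset). Note that string.punctuation covers ASCII punctuation only, so the Devanagari danda (।) that ends many Hindi sentences is not removed; if the data uses it, a word like है। may end up as a separate vocabulary entry from है. Since Devanagari has no letter case, lowercasing leaves Hindi unchanged.

```python
import re
import string

# Character class matching any ASCII punctuation; re.escape guards ], \ and ^.
PUNCT = re.compile(f"[{re.escape(string.punctuation)}]")

def clean(text):
    return PUNCT.sub("", text).lower()

pairs = [
    ("Hello, world!", "नमस्ते, दुनिया!"),
    ("...", "यह वाक्य है।"),   # English side becomes empty
    ("OK?", ""),               # Hindi side is empty
]
cleaned = [(clean(en), clean(hi)) for en, hi in pairs]
# Keep a pair only if both sides still contain non-whitespace text
kept = [(en, hi) for en, hi in cleaned if en.strip() and hi.strip()]
print(kept)  # [('hello world', 'नमस्ते दुनिया')]
```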

Step 4: Tokenization and Sequence Padding

```python
# Importing necessary libraries
from tensorflow.keras.preprocessing.text import Tokenizer
from tensorflow.keras.preprocessing.sequence import pad_sequences

# Initialize Tokenizer for English subtitles
tokenizer_eng = Tokenizer()
tokenizer_eng.fit_on_texts(da['english'])

# Convert text to sequences of integers for English subtitles
sequences_eng = tokenizer_eng.texts_to_sequences(da['english'])

# Initialize Tokenizer for Hindi subtitles
tokenizer_hin = Tokenizer()
tokenizer_hin.fit_on_texts(da['hindi'])

# Convert text to sequences of integers for Hindi subtitles
sequences_hin = tokenizer_hin.texts_to_sequences(da['hindi'])

# Pad sequences to ensure uniform length
max_length = 100  # Define the maximum sequence length
sequences_eng = pad_sequences(sequences_eng, maxlen=max_length, padding='post')
sequences_hin = pad_sequences(sequences_hin, maxlen=max_length, padding='post')

# Vocabulary sizes (+1 because index 0 is reserved for padding)
vocab_size_eng = len(tokenizer_eng.word_index) + 1
vocab_size_hin = len(tokenizer_hin.word_index) + 1
```

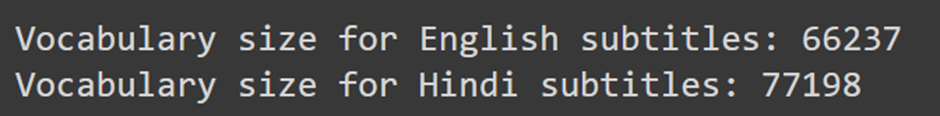

```python
print("Vocabulary size for English subtitles:", vocab_size_eng)
print("Vocabulary size for Hindi subtitles:", vocab_size_hin)
```

Here, we import the libraries needed for tokenization and sequence padding. Then, we tokenize the text of both the English and Hindi sentences and convert it into sequences of integers. We pad the sequences to a uniform length, and finally, we print the vocabulary sizes for both languages.
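Conceptually, the tokenizer builds a word index ranked by frequency, starting at 1 so that 0 can serve as padding, and pad_sequences then forces every row to max_length. The pure-Python sketch below mimics that behavior on two made-up sentences; it is an illustration, not the Keras implementation, and the helper names are hypothetical. One default worth knowing: pad_sequences uses truncating='pre', so with only padding='post' set, sentences longer than max_length lose their beginning rather than their end; passing truncating='post' keeps the start.

```python
from collections import Counter

def fit_word_index(texts):
    """Map words to ids by descending frequency; id 0 stays free for padding."""
    counts = Counter(word for text in texts for word in text.split())
    ranked = sorted(counts, key=counts.get, reverse=True)  # stable: ties keep first-seen order
    return {word: i + 1 for i, word in enumerate(ranked)}

def texts_to_ids(texts, word_index):
    return [[word_index[w] for w in text.split() if w in word_index] for text in texts]

def pad(sequences, maxlen, truncating="pre"):
    """Post-pad with 0; drop tokens from the front ('pre') or back ('post') if too long."""
    padded = []
    for seq in sequences:
        seq = seq[-maxlen:] if truncating == "pre" else seq[:maxlen]
        padded.append(seq + [0] * (maxlen - len(seq)))
    return padded

texts = ["the cat sat", "the dog sat on the mat"]
word_index = fit_word_index(texts)
sequences = texts_to_ids(texts, word_index)
padded = pad(sequences, maxlen=4)
print(word_index)  # {'the': 1, 'sat': 2, 'cat': 3, 'dog': 4, 'on': 5, 'mat': 6}
print(padded)      # [[1, 3, 2, 0], [2, 5, 1, 6]]
vocab_size = len(word_index) + 1  # 7, counting the padding id
```

The second sentence shows the truncation default at work: "the dog" is dropped from the front so that the row fits in four positions.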

Determining Sequence Lengths

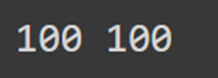

```python
eng_length = sequences_eng.shape[1]  # Length of English sequences
hin_length = sequences_hin.shape[1]  # Length of Hindi sequences
print(eng_length, hin_length)
```

Here, we determine the sequence lengths for both the English and Hindi sentences. The length of a sequence is the number of tokens (words) in it; because both arrays were padded to max_length, both values equal 100.

Step 5: Splitting Data into Training and Validation Sets

```python
from sklearn.model_selection import train_test_split
```

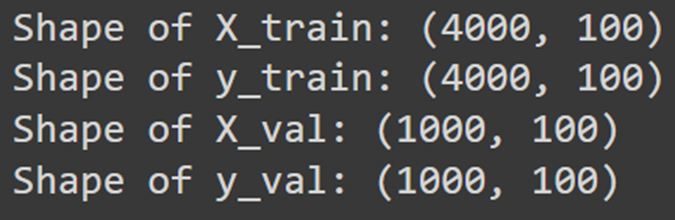

```python
# Split the first 50,000 sentence pairs into training and validation sets
X_train, X_val, y_train, y_val = train_test_split(
    sequences_eng[:50000], sequences_hin[:50000], test_size=0.2, random_state=42
)

# Verify the shapes of the datasets
print("Shape of X_train:", X_train.shape)
print("Shape of y_train:", y_train.shape)
print("Shape of X_val:", X_val.shape)
print("Shape of y_val:", y_val.shape)
```

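In this step, we split the preprocessed data into training and validation sets. Only the first 50,000 sentence pairs are used, and test_size=0.2 holds out 20% of them (10,000 pairs, assuming at least 50,000 remain after cleaning) for validation.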

Step 6: Building the LSTM Model

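```python
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense, LSTM, Embedding, RepeatVector

model = Sequential()
# Encoder: embed English token ids (0 = padding, masked) and summarize them with an LSTM
model.add(Embedding(input_dim=vocab_size_eng, output_dim=128, input_shape=(eng_length,), mask_zero=True))
model.add(LSTM(units=512))
# Repeat the encoder's final state once per Hindi time step
model.add(RepeatVector(n=hin_length))
# Decoder: one 512-dimensional output per target time step
model.add(LSTM(units=512, return_sequences=True))
# Probability distribution over the Hindi vocabulary at every time step
model.add(Dense(units=vocab_size_hin, activation='softmax'))
```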

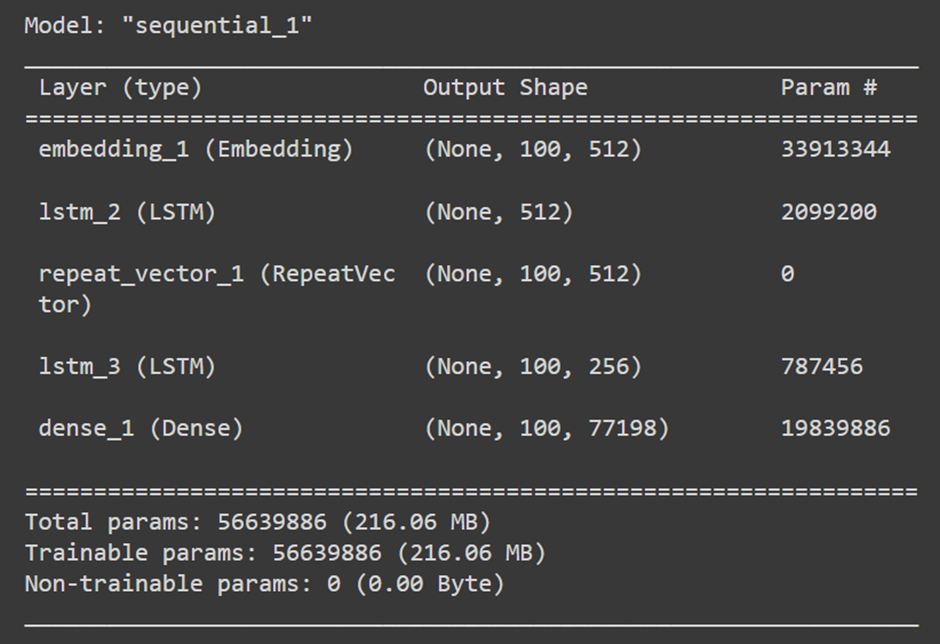

This step builds the LSTM sequence-to-sequence model for English-to-Hindi translation. Let's break down the layers added to the model:

The first layer is an embedding layer (Embedding), which maps each word index to a dense vector representation. It takes the English vocabulary size (vocab_size_eng), the output dimensionality (output_dim=128), and the input shape given by the maximum English sequence length (input_shape=(eng_length,)). Additionally, mask_zero=True is set so that padded zeros are ignored.

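Next, we add an LSTM layer (LSTM) with 512 units, which processes the embedded sequences. Because return_sequences is left at its default of False, it outputs only its final hidden state: a fixed-size summary of the whole English sentence.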

The RepeatVector layer repeats the output of the LSTM layer hin_length times, preparing it to be fed into the next LSTM layer as a sequence.

Then, we add another LSTM layer with 512 units, set to return sequences (return_sequences=True), which is essential for a sequence-to-sequence model because it must produce one output per Hindi time step.

Finally, we add a dense layer (Dense) with a softmax activation function to predict the probability distribution over the Hindi vocabulary at each time step.
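To make the data flow concrete, the following NumPy sketch traces tensor shapes through the same stack with toy sizes (batch 2, 5 target steps, 8 units, an 11-word vocabulary, all made up). The encoder produces a single vector per sentence, RepeatVector copies it once per Hindi time step, and the Dense layer applies the same weights at every step. The decoder LSTM is stood in for by random numbers, since only the shapes and the per-step softmax are being illustrated.

```python
import numpy as np

rng = np.random.default_rng(0)
batch, hin_length, units, vocab = 2, 5, 8, 11  # toy sizes, not the article's

# Encoder LSTM output: one vector per sentence (its last hidden state)
encoded = rng.normal(size=(batch, units))

# What RepeatVector(hin_length) does: copy that vector along a new time axis
repeated = np.repeat(encoded[:, np.newaxis, :], hin_length, axis=1)
print(repeated.shape)  # (2, 5, 8)

# Stand-in for the decoder LSTM output (return_sequences=True)
decoder_out = rng.normal(size=(batch, hin_length, units))

# Dense(vocab, softmax): same weights at every time step
W = rng.normal(size=(units, vocab))
bias = np.zeros(vocab)
logits = decoder_out @ W + bias
probs = np.exp(logits - logits.max(axis=-1, keepdims=True))
probs /= probs.sum(axis=-1, keepdims=True)
print(probs.shape)  # (2, 5, 11)
```

Every Hindi position starts from the same copied vector, which is why this design relies on the 512-unit encoder state to hold the entire English sentence.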

Printing the Model Summary

```python
model.summary()
```
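The parameter counts in the summary can be cross-checked by hand. A Keras LSTM layer has an input kernel, a recurrent kernel, and a bias, each covering four gate blocks, giving 4 × (units × (input_dim + units) + units) parameters. The helpers below are hypothetical convenience functions, and the vocabulary sizes in the usage line are placeholders; substitute the vocab_size_eng and vocab_size_hin values printed in Step 4.

```python
def lstm_params(input_dim, units):
    # kernel (input_dim x 4*units) + recurrent kernel (units x 4*units) + bias (4*units)
    return 4 * (units * (input_dim + units) + units)

def seq2seq_params(vocab_size_eng, vocab_size_hin, emb_dim=128, units=512):
    counts = {
        "embedding": vocab_size_eng * emb_dim,
        "lstm_encoder": lstm_params(emb_dim, units),
        "repeat_vector": 0,  # RepeatVector has no weights
        "lstm_decoder": lstm_params(units, units),
        "dense": units * vocab_size_hin + vocab_size_hin,
    }
    counts["total"] = sum(counts.values())
    return counts

# Placeholder vocabulary sizes; use the real ones from Step 4
print(seq2seq_params(vocab_size_eng=10_000, vocab_size_hin=12_000))
```

The two LSTM layers contribute a fixed 1,312,768 and 2,099,200 parameters, so for realistic vocabularies most of the model's size sits in the embedding and the output Dense layer.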

Step 7: Compiling and Training the Model

```python
from tensorflow.keras.optimizers import RMSprop

# Define the optimizer
rms = RMSprop(learning_rate=0.001)

# Compile the model
model.compile(optimizer=rms, loss="sparse_categorical_crossentropy", metrics=['accuracy'])
```

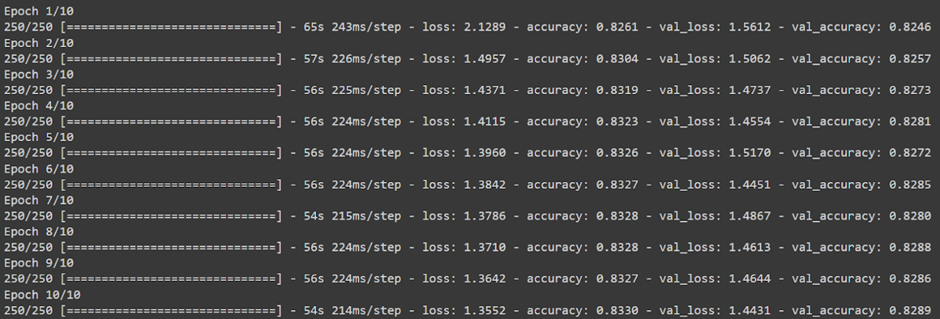

```python
# Train the model
history = model.fit(X_train, y_train, validation_data=(X_val, y_val), epochs=10, batch_size=32)
```

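This step compiles the LSTM model with the RMSprop optimizer (learning rate 0.001), the sparse_categorical_crossentropy loss function, and the accuracy metric. Sparse categorical cross-entropy fits here because the targets are integer word ids rather than one-hot vectors. The model is then trained on the training data for 10 epochs with a batch size of 32, and evaluated on the validation set after each epoch. Training returns a History object whose history attribute records these metrics for every epoch.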
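One caveat when reading these metrics: the Hindi targets are post-padded to 100 positions, so most target tokens are the padding id 0. The encoder LSTM returns only its last state, and as far as I can tell Keras does not carry the Embedding mask past that layer, so padded positions are most likely included in both the loss and the accuracy. The NumPy sketch below (toy numbers, hypothetical helper names) computes sparse categorical cross-entropy and token accuracy with and without ignoring padding, for a degenerate model that always predicts padding.

```python
import numpy as np

def sparse_ce(probs, targets, ignore_padding=False, eps=1e-7):
    """Mean -log p(target); probs: (batch, time, vocab), targets: (batch, time) int ids."""
    picked = np.take_along_axis(probs, targets[..., np.newaxis], axis=-1)[..., 0]
    nll = -np.log(np.clip(picked, eps, 1.0))
    if ignore_padding:
        return nll[targets != 0].mean()  # average over real tokens only
    return nll.mean()

def token_accuracy(probs, targets, ignore_padding=False):
    correct = probs.argmax(axis=-1) == targets
    if ignore_padding:
        return correct[targets != 0].mean()
    return correct.mean()

# Toy target: 2 real tokens followed by 8 padding zeros; vocabulary of 6 ids
targets = np.array([[5, 3, 0, 0, 0, 0, 0, 0, 0, 0]])
probs = np.full((1, 10, 6), 0.02)
probs[..., 0] = 0.9  # a model that always predicts padding

print(token_accuracy(probs, targets))                       # 0.8
print(token_accuracy(probs, targets, ignore_padding=True))  # 0.0
print(sparse_ce(probs, targets), sparse_ce(probs, targets, ignore_padding=True))
```

A model that has learned nothing but "output padding" already scores 80% token accuracy on this toy row, so a masked metric (for example via a custom loss or metric) gives a more honest picture of translation quality.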

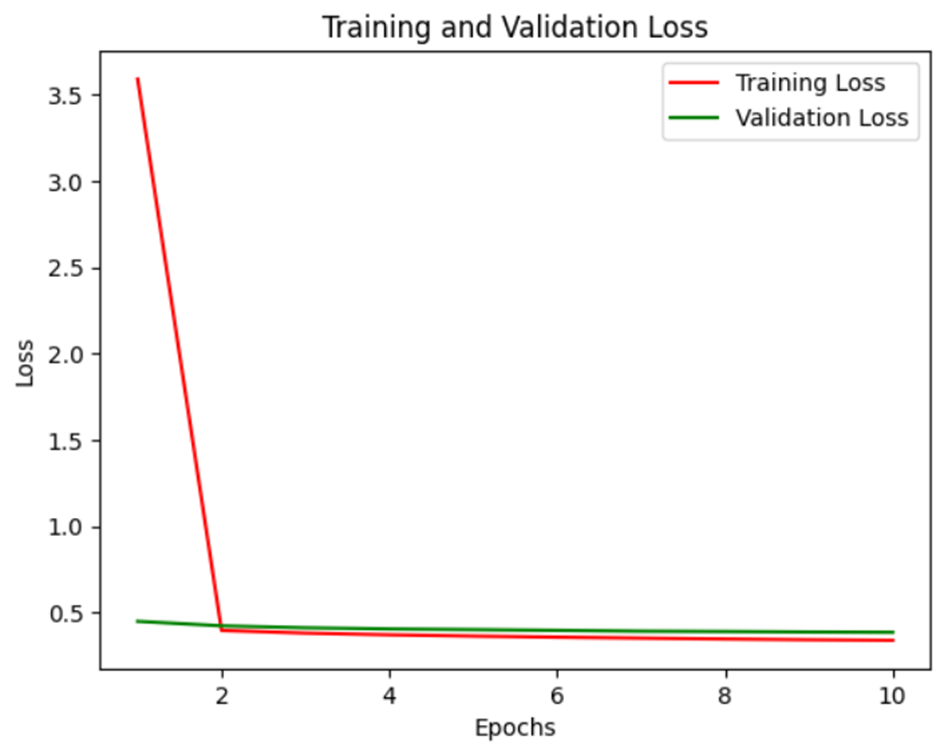

Step 8: Plotting Training and Validation Loss

```python
import matplotlib.pyplot as plt

# Get the training history
loss = history.history['loss']
val_loss = history.history['val_loss']
epochs = range(1, len(loss) + 1)

# Plot loss and validation loss with custom colors
plt.plot(epochs, loss, 'r', label="Training Loss")  # Red for training loss
plt.plot(epochs, val_loss, 'g', label="Validation Loss")  # Green for validation loss
plt.title('Training and Validation Loss')
plt.xlabel('Epochs')
plt.ylabel('Loss')
plt.legend()
plt.show()
```

This step plots the training and validation loss over the epochs to visualize the model's learning progress; a validation loss that rises while the training loss keeps falling is a sign of overfitting.

Conclusion

This guide walks through the creation of an LSTM sequence-to-sequence model for English-to-Hindi translation. It begins with an overview of RNNs and LSTMs, emphasizing their ability to handle sequential data effectively. The objective is to translate English sentences into Hindi using this model.

The steps include loading data from Hugging Face, preprocessing to remove punctuation and handle empty rows, and tokenization with sequence padding for uniform length. The LSTM model is carefully constructed from embedding, LSTM, RepeatVector, and dense layers. Training involves compiling the model with an optimizer, loss function, and metrics, followed by fitting it to the dataset over several epochs.

Visualizing the training and validation loss offers insight into the model's learning progress. Ultimately, this guide equips readers with the skills to build LSTM models for language translation tasks, providing a foundation for further exploration in NLP.

Frequently Asked Questions

A. An LSTM (Long Short-Term Memory) sequence-to-sequence model is a neural network architecture that maps an input sequence to an output sequence, for example a sentence in one language to its translation in another. It uses LSTM units to capture both short-term and long-term dependencies within sequential data.

A. The LSTM model processes input sequences, here in English, and generates corresponding output sequences in another language such as Hindi. It does so by learning to encode the input sequence into a fixed-size vector representation and then decoding this representation into the output sequence.
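Because this model emits one softmax distribution per Hindi time step, a simple (greedy) way to turn its output into text is to take the most likely id at each step, drop the padding id 0, and map the remaining ids back to words. A minimal sketch, assuming a fake model output and a made-up index-to-word dictionary:

```python
import numpy as np

def greedy_decode(step_probs, index_word):
    """step_probs: (time, vocab) softmax output for one sentence."""
    ids = step_probs.argmax(axis=-1)  # most likely id at each time step
    return " ".join(index_word[int(i)] for i in ids if i != 0)  # skip padding id 0

# Hypothetical vocabulary and a fake output that spells "मैं घर जा रहा हूँ"
index_word = {1: "मैं", 2: "घर", 3: "जा", 4: "रहा", 5: "हूँ"}
step_probs = np.eye(6)[[1, 2, 3, 4, 5, 0, 0]]  # one-hot rows, shape (7, 6)
print(greedy_decode(step_probs, index_word))
```

With the trained model, the analogous call would presumably be greedy_decode(model.predict(X_val[:1])[0], tokenizer_hin.index_word), since the Keras Tokenizer keeps an index_word mapping alongside word_index.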

A. Preprocessing steps include removing punctuation, handling empty rows, tokenizing the text into sequences of integers, and padding the sequences to a uniform length.

A. Common evaluation metrics include training and validation loss, which measure the discrepancy between predicted and actual sequences during training. Additionally, metrics like the BLEU score can be used to evaluate the quality of the model's translations.
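BLEU compares the n-grams of a model's output with those of a reference translation. The sketch below is a minimal, unsmoothed, single-reference sentence-level BLEU-4: clipped n-gram precisions for n = 1 to 4, combined by a geometric mean and multiplied by a brevity penalty. It is meant for intuition only. It returns 0 whenever any n-gram order has no match (for example, for candidates shorter than four words), which is one reason reported scores normally come from an established implementation such as sacreBLEU, computed at corpus level.

```python
import math
from collections import Counter

def ngram_counts(tokens, n):
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

def sentence_bleu(reference, candidate, max_n=4):
    """Unsmoothed single-reference sentence BLEU on whitespace tokens."""
    ref, cand = reference.split(), candidate.split()
    if not cand:
        return 0.0
    log_precision_sum = 0.0
    for n in range(1, max_n + 1):
        cand_counts = ngram_counts(cand, n)
        ref_counts = ngram_counts(ref, n)
        total = sum(cand_counts.values())
        # Clip each candidate n-gram count by its count in the reference
        matched = sum(min(count, ref_counts[gram]) for gram, count in cand_counts.items())
        if total == 0 or matched == 0:
            return 0.0
        log_precision_sum += math.log(matched / total)
    # Brevity penalty: penalize candidates shorter than the reference
    bp = 1.0 if len(cand) > len(ref) else math.exp(1 - len(ref) / len(cand))
    return bp * math.exp(log_precision_sum / max_n)

print(sentence_bleu("a b c d e f", "a b c d e x"))
```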

A. Performance can be improved by experimenting with different model architectures, adjusting hyperparameters such as the learning rate and batch size, increasing the size of the training dataset, and using techniques like attention mechanisms to focus on the relevant parts of the input sequence during translation.
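As an illustration of the attention idea: instead of compressing the sentence into one vector, the encoder keeps one output per English position, and at each decoding step the decoder scores those outputs, normalizes the scores with a softmax, and takes their weighted sum as a context vector. Below is a minimal NumPy sketch of dot-product (Luong-style) attention for a single decoder step with made-up vectors. Keras offers tf.keras.layers.Attention for dot-product attention, though wiring it into this model would likely require the functional API and an encoder with return_sequences=True.

```python
import numpy as np

def dot_product_attention(query, encoder_outputs):
    """query: (units,) decoder state; encoder_outputs: (src_len, units)."""
    scores = encoder_outputs @ query         # similarity to each source position
    weights = np.exp(scores - scores.max())
    weights /= weights.sum()                 # softmax over source positions
    context = weights @ encoder_outputs      # weighted sum of encoder outputs
    return context, weights

encoder_outputs = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])  # 3 source positions
query = np.array([0.0, 3.0])
context, weights = dot_product_attention(query, encoder_outputs)
print(weights.round(3), context.round(3))
```

Here the query aligns with the second and third source positions, so they receive nearly all of the weight, while the first position is almost ignored.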

A. Yes, the LSTM model can be adapted to translate between language pairs other than English and Hindi. By training it on datasets of sentence pairs in other languages, it can learn to perform translation for those language pairs as well.
