In today’s digital age, smartphones and desktop web browsers serve as the primary tools for accessing news and information. However, the proliferation of website clutter (complex layouts, navigation elements, and extraneous links) significantly impairs both the reading experience and article navigation. This issue is particularly acute for individuals with accessibility requirements.

To improve the user experience and make reading more accessible, Android and Chrome users may use the Reading Mode feature, which enhances accessibility by processing webpages to allow customizable contrast, adjustable text size, and more legible fonts, and to enable text-to-speech utilities. Additionally, Android’s Reading Mode is equipped to distill content from apps. Expanding Reading Mode to cover a wide array of content and improving its performance, while still operating locally on the user’s device without transmitting data externally, poses a unique challenge.

To broaden Reading Mode capabilities without compromising privacy, we have developed a novel on-device content distillation model. Unlike early attempts using DOM Distiller, a heuristic approach limited to news articles, our model excels in both quality and versatility across various types of content. We ensure that article content doesn’t leave the confines of the local environment. Our on-device content distillation model smoothly transforms long-form content into a simple and customizable layout for a more pleasant reading experience, while also outperforming the leading alternative approaches. Here we explore the details of this research, highlighting our approach, methodology, and results.

Graph neural networks

Instead of relying on complicated heuristics that are difficult to maintain and to scale to a variety of article layouts, we approach this task as a fully supervised learning problem. This data-driven approach allows the model to generalize better across different layouts, without the constraints and fragility of heuristics. Previous work for optimizing the reading experience relied on HTML or on parsing, filtering, and modeling of a document object model (DOM), a programming interface automatically generated by the user’s web browser from site HTML that represents the structure of a document and allows it to be manipulated.

The new Reading Mode model relies on accessibility trees, which provide a streamlined and more accessible representation of the DOM. Accessibility trees are automatically generated from the DOM tree and are used by assistive technologies to allow people with disabilities to interact with web content. They are available in the Chrome web browser and on Android through AccessibilityNodeInfo objects, which are provided for both WebView and native application content.
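Because the model consumes the accessibility tree as a graph, it helps to see how a nested tree flattens into the node list and edge list that a GNN operates on. The sketch below is illustrative only: `AxNode` and `tree_to_graph` are hypothetical stand-ins, not Chrome’s accessibility node type or Android’s `AccessibilityNodeInfo`, the role strings are examples, and emitting each parent-child link in both directions is an assumption about how messages are allowed to flow.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class AxNode:
    """Hypothetical, simplified accessibility-tree node (not a real Chrome/Android type)."""
    role: str
    text: str = ""
    children: list[AxNode] = field(default_factory=list)


def tree_to_graph(root: AxNode) -> tuple[list[AxNode], list[tuple[int, int]]]:
    """Flatten a tree into a pre-order node list and a directed edge list.

    Each parent-child link is emitted in both directions (an assumption) so that
    information can later propagate both up and down the tree.
    """
    nodes: list[AxNode] = []
    edges: list[tuple[int, int]] = []

    def visit(node: AxNode, parent_idx: int | None) -> None:
        idx = len(nodes)
        nodes.append(node)
        if parent_idx is not None:
            edges.append((parent_idx, idx))  # parent -> child
            edges.append((idx, parent_idx))  # child -> parent
        for child in node.children:
            visit(child, idx)

    visit(root, None)
    return nodes, edges


# Usage: a tiny page with a heading and one paragraph inside an article container.
page = AxNode("RootWebArea", children=[
    AxNode("heading", "Title"),
    AxNode("article", children=[AxNode("paragraph", "Body text.")]),
])
nodes, edges = tree_to_graph(page)
```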

We started by manually collecting and annotating accessibility trees. The Android dataset used for this project comprises on the order of 10k labeled examples, while the Chrome dataset contains approximately 100k labeled examples. We developed a novel tool that uses graph neural networks (GNNs) to distill essential content from the accessibility trees using a multi-class supervised learning approach. The datasets consist of long-form articles sampled from the web and labeled with classes such as headline, paragraph, images, publication date, etc.

GNNs are a natural choice for dealing with tree-like data structures because, unlike traditional models that often demand detailed, hand-crafted features to understand the layout and links within such trees, GNNs learn these connections naturally. To illustrate this, consider the analogy of a family tree. In such a tree, each node represents a family member and the connections denote familial relationships. If one were to predict certain traits using conventional models, features like the “number of immediate family members with a trait” might be needed. With GNNs, however, such manual feature crafting becomes redundant. By feeding the tree structure directly into the model, GNNs use a message-passing mechanism in which each node communicates with its neighbors. Over successive rounds, information is shared and accumulated across the network, enabling the model to discern intricate relationships on its own.
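To make the family-tree analogy concrete, the sketch below runs a single round of message passing with a plain sum as the aggregation. With that choice, the value each node receives is exactly the hand-crafted feature “number of immediate family members with a trait”, with no feature engineering. The family, the trait values, and the function name are hypothetical; a real GNN would replace the plain sum with learned message and update functions, and stacking rounds would let information reach more distant relatives.

```python
# Hypothetical family tree as an adjacency list (parent-child links only).
family = {
    "grandma": ["mom", "uncle"],
    "mom": ["grandma", "alice", "bob"],
    "uncle": ["grandma"],
    "alice": ["mom"],
    "bob": ["mom"],
}
# 1.0 if the person has the trait, else 0.0 (toy data).
has_trait = {"grandma": 1.0, "mom": 0.0, "uncle": 1.0, "alice": 1.0, "bob": 0.0}


def message_passing_step(graph, state):
    """One round of sum aggregation: each node sums the states of its neighbors."""
    return {node: sum(state[nb] for nb in neighbors) for node, neighbors in graph.items()}


# After one round, each value equals the count of immediate relatives with the trait.
received = message_passing_step(family, has_trait)
```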

Returning to the context of accessibility trees, this means that GNNs can efficiently distill content by understanding and leveraging the inherent structure and relationships within the tree. This capability allows them to identify, and potentially omit, non-essential sections based on the information flow within the tree, leading to more accurate content distillation.

Our architecture closely follows the encode-process-decode paradigm, using a message-passing neural network to classify text nodes. The overall design is illustrated in the figure below. The tree representation of the article is the input to the model. We compute lightweight features based on bounding box information, text information, and accessibility roles. The GNN then propagates each node’s latent representation through the edges of the tree using a message-passing neural network. This propagation process allows nearby nodes, containers, and text elements to share contextual information with each other, enhancing the model’s understanding of the page’s structure and content. Each node then updates its current state based on the messages received, providing a more informed basis for classifying the nodes. After a fixed number of message-passing steps, the now contextualized latent representations of the nodes are decoded into essential or non-essential classes. This approach allows the model to leverage both the inherent relationships in the tree and the hand-crafted features representing each node, thereby enriching the final classification.
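The encode-process-decode flow described above can be sketched in a few lines of pure Python. This is a minimal illustration under stated assumptions, not the production model: the aggregation (mean over incoming edges), the ReLU update, the fixed number of steps, the hand-set untrained 2x2 weights, and the names `encode_process_decode` and `params` are all hypothetical.

```python
import math


def matvec(W, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def relu(v):
    return [max(0.0, a) for a in v]


def softmax(v):
    m = max(v)  # subtract the max for numerical stability
    exps = [math.exp(a - m) for a in v]
    total = sum(exps)
    return [e / total for e in exps]


def encode_process_decode(features, edges, params, steps=2):
    # Encode: map each node's raw features to a latent vector.
    h = [relu(matvec(params["W_enc"], x)) for x in features]
    dim = len(h[0])
    for _ in range(steps):
        # Process: each node averages the latent states arriving on its incoming edges...
        incoming = [[0.0] * dim for _ in h]
        counts = [0] * len(h)
        for src, dst in edges:
            incoming[dst] = [a + b for a, b in zip(incoming[dst], h[src])]
            counts[dst] += 1
        messages = [[a / c for a in msg] if c else msg for msg, c in zip(incoming, counts)]
        # ...then updates its own state from (previous state, aggregated message).
        h = [relu([s + m for s, m in zip(matvec(params["W_self"], hv),
                                         matvec(params["W_msg"], mv))])
             for hv, mv in zip(h, messages)]
    # Decode: per-node probabilities over (essential, non-essential).
    # In practice only the predictions for text leaves would be kept.
    return [softmax(matvec(params["W_dec"], hv)) for hv in h]


# Hypothetical, untrained weights and a 3-node tree: container 0 with text leaves 1 and 2.
params = {
    "W_enc": [[1.0, 0.0], [0.0, 1.0]],
    "W_self": [[0.5, 0.0], [0.0, 0.5]],
    "W_msg": [[0.5, 0.0], [0.0, 0.5]],
    "W_dec": [[1.0, -1.0], [-1.0, 1.0]],
}
features = [[0.2, 0.1], [0.9, 0.0], [0.1, 0.8]]
edges = [(0, 1), (1, 0), (0, 2), (2, 0)]
probs = encode_process_decode(features, edges, params)
```

Because the weights are arbitrary, the resulting probabilities only demonstrate the data flow; training would fit `W_enc`, `W_self`, `W_msg`, and `W_dec` to the labeled trees.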

A visual demonstration of the algorithm in action, processing an article on a mobile device. A graph neural network (GNN) is used to distill essential content from an article. 1. A tree representation of the article is extracted from the application. 2. Lightweight features are computed for each node, represented as vectors. 3. A message-passing neural network propagates information through the edges of the tree and updates each node representation. 4. Leaf nodes containing text content are classified as essential or non-essential content. 5. A decluttered version of the application is composed based on the GNN output.

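Step 5 of the caption, composing a decluttered view from the per-leaf classifications, can be sketched as a document-order traversal that keeps only essential leaves. The node type, the label names (`headline`, `main_text`, `non_essential`), and the function name are hypothetical illustrations; the post does not describe how the reading view is actually assembled.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class LabeledNode:
    """Hypothetical tree node; `label` is set on text leaves by the classifier."""
    text: str = ""
    label: str | None = None  # e.g. "headline", "main_text", "non_essential"
    children: list[LabeledNode] = field(default_factory=list)


ESSENTIAL = {"headline", "main_text"}


def compose_reading_view(root: LabeledNode) -> list[tuple[str, str]]:
    """Collect essential leaves in document (pre-order) order as (label, text) pairs."""
    out: list[tuple[str, str]] = []
    stack = [root]
    while stack:
        node = stack.pop()
        if not node.children:
            if node.label in ESSENTIAL and node.text:
                out.append((node.label, node.text))
        else:
            # Push children in reverse so the leftmost child is visited first.
            stack.extend(reversed(node.children))
    return out


# Usage with toy, already-classified leaves.
page = LabeledNode(children=[
    LabeledNode("Menu  Home  Sign in", "non_essential"),
    LabeledNode("Reading Mode gets smarter", "headline"),
    LabeledNode(children=[
        LabeledNode("First paragraph.", "main_text"),
        LabeledNode("Advertisement", "non_essential"),
        LabeledNode("Second paragraph.", "main_text"),
    ]),
])
view = compose_reading_view(page)
```

An explicit stack is used instead of recursion so that deeply nested pages cannot hit Python’s recursion limit.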

We deliberately restrict the feature set used by the model to improve its generalization across languages and to reduce inference latency on user devices. This was a unique challenge, as we needed to create a lightweight on-device model that could preserve privacy.

Our final lightweight Android model has 64k parameters and is 334kB in size with a median latency of 800ms, while the Chrome model has 241k parameters, is 928kB in size, and has a 378ms median latency. By employing such on-device processing, we ensure that user data never leaves the device, reinforcing our responsible approach and commitment to user privacy. The features used in the model can be grouped into intermediate node features, leaf-node text features, and element position features. We performed feature engineering and feature selection to optimize the set of features for both model performance and model size. The final model was converted into TensorFlow Lite format for deployment as an on-device model on Android or Chrome.
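The three feature groups named above can be illustrated with a small per-node feature function. Every concrete feature here is a hypothetical guess shaped by the signals the post mentions (accessibility roles, text, bounding boxes): the role vocabulary, the caps, the punctuation set, and the names `AxNodeWithBox` and `node_features` are assumptions, not the actual feature set. The text features deliberately avoid any vocabulary, which is one way a restricted feature set can stay language-agnostic.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical role vocabulary; real accessibility trees define many more roles.
ROLES = ["generic", "heading", "paragraph", "link", "image", "list"]
PUNCTUATION = set(".,;:!?\"'()\u3002\u3001")  # includes the CJK full stop and comma


@dataclass
class AxNodeWithBox:
    role: str
    bbox: tuple[float, float, float, float]  # (left, top, width, height) in pixels
    text: str = ""
    children: list[AxNodeWithBox] = field(default_factory=list)


def intermediate_features(node: AxNodeWithBox, depth: int) -> list[float]:
    # Role one-hot (unknown roles map to "generic"), plus capped child count and depth.
    idx = ROLES.index(node.role) if node.role in ROLES else 0
    one_hot = [1.0 if i == idx else 0.0 for i in range(len(ROLES))]
    return one_hot + [min(len(node.children) / 10, 1.0), min(depth / 20, 1.0)]


def text_features(node: AxNodeWithBox) -> list[float]:
    # Only text leaves get non-zero values; no per-language vocabulary is used.
    text = node.text
    if node.children or not text:
        return [0.0, 0.0, 0.0]
    n = len(text)
    punct = sum(ch in PUNCTUATION for ch in text)
    digits = sum(ch.isdigit() for ch in text)
    return [min(n / 500, 1.0), punct / n, digits / n]


def position_features(node: AxNodeWithBox, page_w: float, page_h: float) -> list[float]:
    # Bounding box normalized by the page size.
    left, top, w, h = node.bbox
    return [left / page_w, top / page_h, w / page_w, h / page_h]


def node_features(node: AxNodeWithBox, depth: int, page_w: float, page_h: float) -> list[float]:
    return (intermediate_features(node, depth) + text_features(node)
            + position_features(node, page_w, page_h))


para = AxNodeWithBox("paragraph", (0, 200, 400, 100), "Hello, world.")
vec = node_features(para, depth=2, page_w=400, page_h=800)  # 15 values
```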

Results

We trained the GNN for about 50 epochs on a single GPU. The performance of the Android model on the webpage and native application test sets is presented below:

The table presents the content distillation metrics in Android for webpages and native apps. We report precision, recall, and F1-score for three classes (non-essential content, headline, and main body text), together with the macro average and the average weighted by the number of instances in each class. Node metrics assess classification performance at the granularity of the accessibility tree node, which is analogous to the paragraph level. In contrast, word metrics evaluate classification at the level of individual words, meaning each word within a node receives that node’s classification.

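The difference between node-level and word-level metrics, and between macro and weighted averages, can be shown with a short sketch. It assumes word metrics are obtained by weighting each node’s prediction by the node’s word count, which follows from every word inheriting its node’s label; the labels, word counts, and function names are toy, hypothetical values rather than the evaluation code used for the table.

```python
from collections import Counter


def per_class_prf(gold, pred, weights=None):
    """Per-class (precision, recall, F1) with optional per-item weights.

    Node-level metrics: one item per node, weight 1.
    Word-level metrics: the same predictions weighted by each node's word count.
    """
    weights = weights or [1] * len(gold)
    tp, fp, fn, support = Counter(), Counter(), Counter(), Counter()
    for g, p, w in zip(gold, pred, weights):
        support[g] += w
        if g == p:
            tp[g] += w
        else:
            fp[p] += w
            fn[g] += w
    scores = {}
    for c in sorted(set(gold) | set(pred)):
        prec = tp[c] / (tp[c] + fp[c]) if tp[c] + fp[c] else 0.0
        rec = tp[c] / (tp[c] + fn[c]) if tp[c] + fn[c] else 0.0
        f1 = 2 * prec * rec / (prec + rec) if prec + rec else 0.0
        scores[c] = (prec, rec, f1)
    return scores, support


def macro_and_weighted_f1(scores, support):
    """Macro: unweighted mean of class F1. Weighted: mean weighted by class support."""
    classes = list(scores)
    macro = sum(scores[c][2] for c in classes) / len(classes)
    total = sum(support[c] for c in classes)
    weighted = sum(scores[c][2] * support[c] for c in classes) / total
    return macro, weighted


# Toy example: one long paragraph (40 words) is wrongly marked non-essential.
gold = ["headline", "main_text", "main_text", "non_essential"]
pred = ["headline", "main_text", "non_essential", "non_essential"]
words = [6, 120, 40, 3]

node_scores, node_support = per_class_prf(gold, pred)
word_scores, word_support = per_class_prf(gold, pred, words)
node_macro, node_weighted = macro_and_weighted_f1(node_scores, node_support)
```

In this toy case the main-text recall is 0.5 per node but 0.75 per word, because the correctly kept paragraph is much longer than the missed one; the two views can therefore rank the same errors quite differently.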

In assessing the quality of results on commonly visited webpage articles, an F1-score exceeding 0.9 for main text (essentially paragraphs) corresponds to 88% of these articles being processed without missing any paragraphs. Furthermore, in over 95% of cases, the distillation proves valuable for readers. Put simply, the vast majority of readers will perceive the distilled content as both pertinent and precise, with errors or omissions being infrequent.
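A per-article criterion such as “no paragraph missed” is stricter than a score pooled over all paragraphs, because a single omission marks the whole article as incomplete. The sketch below contrasts the two on toy data; it is one plausible reading of how such a figure could be computed, and the function names and data are hypothetical, since the post does not define the exact procedure behind the 88% figure.

```python
def article_completeness(articles):
    """Fraction of articles in which every gold main-text paragraph was kept.

    `articles` maps an article id to (gold_paragraph_ids, predicted_paragraph_ids).
    """
    complete = sum(1 for gold, pred in articles.values() if set(gold) <= set(pred))
    return complete / len(articles)


def pooled_recall(articles):
    """Main-text recall pooled over all paragraphs of all articles."""
    kept = sum(len(set(gold) & set(pred)) for gold, pred in articles.values())
    total = sum(len(set(gold)) for gold, _ in articles.values())
    return kept / total


# Toy data: four 10-paragraph articles; article "b" loses its last paragraph.
articles = {
    "a": (range(10), range(10)),
    "b": (range(10), range(9)),
    "c": (range(10), range(10)),
    "d": (range(10), range(10)),
}
completeness = article_completeness(articles)  # 3 of 4 articles are complete
recall = pooled_recall(articles)               # 39 of 40 paragraphs are kept
```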

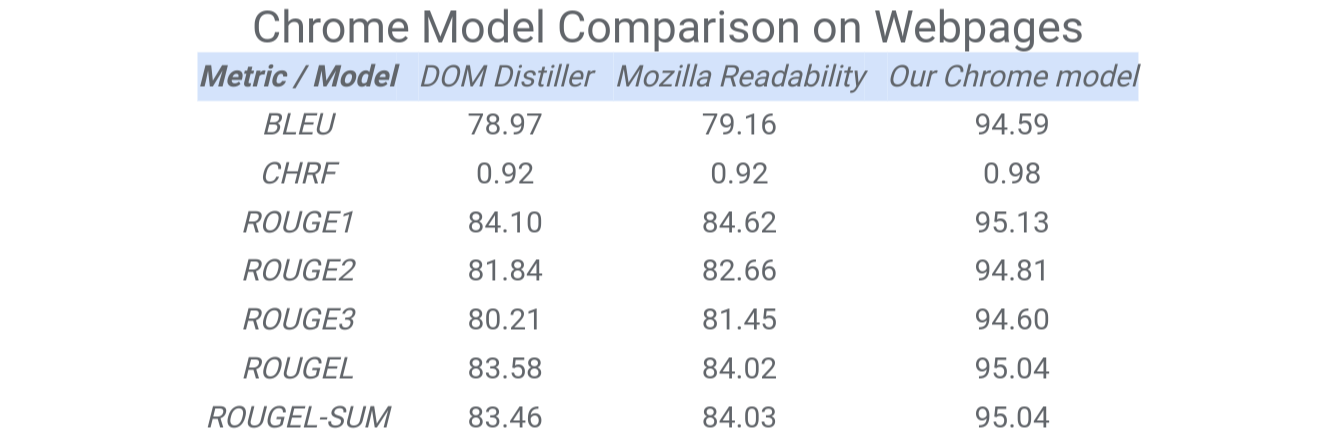

The comparison of Chrome content distillation with other models, such as DOM Distiller and Mozilla Readability, on a set of English-language pages is presented in the table below. We reuse metrics from machine translation to compare the quality of these models: the ground-truth main content serves as the reference text, and the text extracted by each model serves as the hypothesis text. The results show that our models outperform these DOM-based approaches.

The table presents the comparison between DOM Distiller, Mozilla Readability, and the new Chrome model. We report text-based metrics, such as BLEU, chrF, and ROUGE, by comparing the main body text distilled by each model to a ground-truth text manually labeled by raters using our annotation policy.

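To show how a reference/hypothesis metric rewards a distiller, the sketch below computes unigram ROUGE (ROUGE-1): clipped word overlap between the model’s text (hypothesis) and the ground truth (reference). Leftover boilerplate lowers precision, while dropped paragraphs lower recall. This is a simplified illustration, not the toolkit used for the table: whitespace tokenization and lowercasing are assumptions, and standard implementations add stemming and language-specific tokenization.

```python
from __future__ import annotations

from collections import Counter


def rouge1(reference: str, hypothesis: str) -> dict[str, float]:
    """Unigram ROUGE with clipped counts: each word matches at most min(ref, hyp) times."""
    ref = Counter(reference.lower().split())
    hyp = Counter(hypothesis.lower().split())
    overlap = sum((ref & hyp).values())  # Counter '&' keeps the minimum count per word
    precision = overlap / sum(hyp.values()) if hyp else 0.0
    recall = overlap / sum(ref.values()) if ref else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


# A distiller that keeps all of the main text but also one stray menu word.
scores = rouge1("the model keeps the main text",
                "menu the model keeps the main text")
```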

The F1-scores of the Chrome content distillation model for headline and main text content on test sets in several widely spoken languages demonstrate that the Chrome model in particular is able to support a wide range of languages.

The table presents per-language F1-scores of the Chrome model for the headline and main text classes. The language codes correspond to the following languages: German, English, Spanish, French, Italian, Persian, Japanese, Korean, Portuguese, Vietnamese, Simplified Chinese, and Traditional Chinese.


Conclusion

The digital age demands both streamlined content presentation and an unwavering commitment to user privacy. Our research highlights the effectiveness of Reading Mode on platforms like Android and Chrome, offering an innovative, data-driven approach to content parsing through graph neural networks. Crucially, our lightweight on-device model ensures that content distillation occurs without compromising user data, with all processing executed locally. This not only enhances the reading experience but also reinforces our commitment to user privacy. As we navigate the evolving landscape of digital content consumption, our findings underscore the importance of prioritizing the user in both experience and security.

Acknowledgements

This project is the result of joint work with Manuel Tragut, Mihai Popa, Abodunrinwa Toki, Abhanshu Sharma, Matt Sharifi, David Petrou and Blaise Aguera y Arcas. We sincerely thank our collaborators Gang Li and Yang Li. We are very grateful to Tom Small for assisting us in preparing the post.
