Imagine that your job was to sort a huge pile of 40,000 stones into about 200 buckets based on their unique properties.

Each stone must be carefully examined, classified and placed in the correct bucket, which takes about five minutes per stone. Fortunately, you're not alone: you're part of a team of stone sorters trained specifically for this task.

Once all the stones are sorted, your team will analyze each bucket and write detailed essays about the stones in it. Overall, the entire process, including training and reviewing, will take your team somewhere between 4,000 and 8,000 hours, equivalent to two to four people working full time for a year.
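The numbers in the analogy are consistent with each other. Sorting alone comes to roughly 3,333 hours, and training, reviewing and writing fill the rest. The quick check below assumes a full-time year of about 2,000 working hours. That figure is our assumption and does not come from the article.

```python
# Back-of-the-envelope check of the stone-sorting analogy.
# Assumption (not from the article): one full-time person-year is about 2,000 hours.

STONES = 40_000
MINUTES_PER_STONE = 5
HOURS_PER_PERSON_YEAR = 2_000  # assumed full-time working year


def sorting_hours(stones: int, minutes_per_stone: float) -> float:
    """Hours spent only on examining and placing each stone."""
    return stones * minutes_per_stone / 60


def person_years(total_hours: float, hours_per_year: float = HOURS_PER_PERSON_YEAR) -> float:
    """Convert a total effort in hours into full-time person-years."""
    return total_hours / hours_per_year


if __name__ == "__main__":
    print(f"Sorting alone: {sorting_hours(STONES, MINUTES_PER_STONE):,.0f} hours")
    for total in (4_000, 8_000):
        print(f"{total:,} hours = {person_years(total):.1f} person-years")
```

Under that assumption, the 4,000 to 8,000 hour range works out to two to four person-years, which matches the text.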

This grueling sorting process mirrors what government agencies face when handling thousands of public comments on proposed regulations. Agencies must digest and respond to every point the public (including corporate stakeholders) raises about a proposed regulation, and they must do so to protect the regulation against legal challenge. A rigorous response is therefore required every time public comments are submitted. Fortunately, with advances in text analytics and GenAI, this task can be managed efficiently while maintaining accuracy.

Enhancing government processes with text analytics and GenAI

We developed a robust approach that combines natural language processing (NLP), text analytics and a large language model (LLM) to streamline the handling of public input. Technology takes over much of the otherwise manual work, while subject matter experts remain at the center of the process.

In the text analytics step, linguistic rules identify and refine how each unique statement aligns with a specific aspect of the regulation, eliminating tedious manual matching. Because government agencies often seek feedback on as many as 200 different aspects of a regulation, this automated matching significantly boosts efficiency. Next, the LLM drafts an initial summary for each aspect of the regulation based on the matched statements, and a smaller team then refines those drafts. This approach also makes it possible to handle several regulations at once, freeing the team to apply its expertise where it matters most.
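The article does not publish its linguistic rules, and SAS's own rule language is not reproduced here. The Python sketch below uses regular expressions as a stand-in to show the general idea of matching each statement to one or more aspects of a regulation. The aspect names and patterns are hypothetical.

```python
import re
from collections import defaultdict

# Hypothetical aspects and rules; a real project would use the agency's own
# list of regulation aspects (up to about 200) and expert-written rules.
ASPECT_RULES: dict[str, list[str]] = {
    "co2_leakage": [r"\bleak(s|age|ing)?\b", r"\bcarbon dioxide\b"],
    "groundwater": [r"\bgroundwater\b", r"\baquifers?\b", r"\bdrinking water\b"],
    "state_oversight": [r"\boversight\b", r"\benforcement\b", r"\bprimacy\b"],
    "well_abandonment": [r"\babandon(ed|ment)?\b", r"\borphan(ed)? wells?\b"],
}

# Compile once, case-insensitively, so matching many statements stays cheap.
COMPILED = {
    aspect: [re.compile(p, re.IGNORECASE) for p in patterns]
    for aspect, patterns in ASPECT_RULES.items()
}


def match_aspects(statement: str) -> list[str]:
    """Return every aspect with at least one rule that fires on the statement."""
    return [
        aspect
        for aspect, patterns in COMPILED.items()
        if any(p.search(statement) for p in patterns)
    ]


def bucket_statements(statements: dict[str, str]) -> dict[str, list[str]]:
    """Group statement IDs by matched aspect; one statement may land in several buckets."""
    buckets: dict[str, list[str]] = defaultdict(list)
    for sid, text in statements.items():
        for aspect in match_aspects(text):
            buckets[aspect].append(sid)
    return dict(buckets)
```

For example, `bucket_statements({"s1": "CO2 could leak into the aquifer.", "s2": "Louisiana lacks staff for oversight."})` places `s1` under both leakage and groundwater and places `s2` under state oversight. Allowing a statement to land in several buckets reflects the fact that a single comment often touches more than one aspect.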

Putting GenAI and text analytics to the test

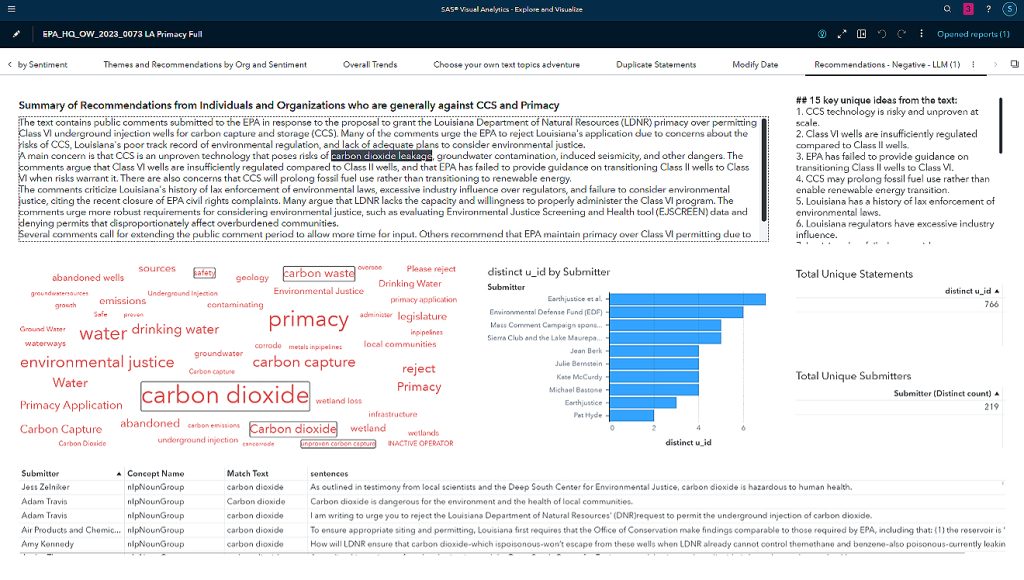

We used SAS® Visual Analytics to apply this process to a proposed regulation from the US Environmental Protection Agency (EPA) that would transfer oversight of carbon capture wells to the state of Louisiana. The EPA received more than 40,000 comments, from which we identified more than 10,000 unique statements. To illustrate the process, we first used text analytics to find statements that contained a concrete recommendation and came from organizations that were negative toward the regulation overall. We then used GenAI to generate summary information. Finally, we built an interactive dashboard in SAS Visual Analytics to help users verify the summary of recommendations from individuals and organizations that generally oppose the regulation or parts of it.
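The article does not say how the 40,000 comments were reduced to 10,000 unique statements or how the statements were filtered. As an illustration only, the sketch below collapses comments with identical normalized text, which is typical of form-letter campaigns. It then keeps comments that come from organizations with a negative stance and that contain a crude "recommendation" cue. The `submitter_type` and `stance` fields and the cue words are hypothetical assumptions.

```python
import re
from dataclasses import dataclass, field


@dataclass
class Comment:
    comment_id: str
    text: str
    submitter_type: str  # hypothetical metadata: "organization" or "individual"
    stance: str          # hypothetical metadata: "negative", "neutral" or "positive"


@dataclass
class UniqueStatement:
    text: str                                             # first wording seen
    comment_ids: list[str] = field(default_factory=list)  # every comment that repeats it


def normalize(text: str) -> str:
    """Lowercase, replace punctuation with spaces and collapse whitespace."""
    text = re.sub(r"[^\w\s]", " ", text.lower())
    return " ".join(text.split())


def deduplicate(comments: list[Comment]) -> dict[str, UniqueStatement]:
    """Collapse comments whose normalized text is identical (e.g. form letters)."""
    uniques: dict[str, UniqueStatement] = {}
    for c in comments:
        entry = uniques.setdefault(normalize(c.text), UniqueStatement(text=c.text))
        entry.comment_ids.append(c.comment_id)
    return uniques


# Crude cue for "contains a concrete recommendation"; an assumption, not SAS's rule.
RECOMMENDATION_CUE = re.compile(r"\b(should|must|recommend\w*|urge\w*)\b", re.IGNORECASE)


def negative_org_recommendations(comments: list[Comment]) -> list[Comment]:
    """Keep recommendations from organizations whose overall stance is negative."""
    return [
        c
        for c in comments
        if c.submitter_type == "organization"
        and c.stance == "negative"
        and RECOMMENDATION_CUE.search(c.text)
    ]
```

Each unique statement keeps the list of comment IDs that produced it, so any later summary can still be traced back to the original submissions.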

In the dashboard shown in Figure 1, the summary descriptions in the top and top-right boxes show how the LLM condensed the key information. We posed a specific question as the header of each box and provided a summary along with 15 key unique ideas. The result is an accurate and compelling description of how the public negatively perceives the proposed regulation and how it could be concretely improved.
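The article does not show the prompts behind each dashboard box. The hypothetical template below illustrates one way to pair a box's question with the pre-filtered statements and ask for a summary plus 15 key ideas. Statement IDs are kept in the prompt so that answers can be traced back to their sources. The wording is our assumption, and the actual LLM call is deliberately omitted.

```python
def build_summary_prompt(
    question: str,
    statements: list[tuple[str, str]],
    n_ideas: int = 15,
) -> str:
    """Assemble a prompt for one dashboard box (hypothetical template).

    `statements` is a list of (statement_id, text) pairs that have already been
    selected by the text analytics pre-filter.
    """
    lines = [
        "You are summarizing public comments on a proposed regulation.",
        f"Question: {question}",
        "Answer using only the statements below and cite statement IDs in brackets.",
        f"Return a short summary followed by {n_ideas} key unique ideas.",
        "",
        "Statements:",
    ]
    # Tag every statement with its ID so each idea can be checked against its source.
    lines += [f"[{sid}] {text}" for sid, text in statements]
    return "\n".join(lines)
```

Asking the model to cite IDs does not guarantee faithful citations. However, it gives reviewers a concrete place to start when they drill down into the statements.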

Although tens of thousands of statements were submitted on this regulation, using NLP and text analytics as a pre-filter let us pass only about 5% of the most pertinent statements to the LLM. This allowed the LLM to produce an accurate, traceable summary at lower cost. The LLM's summary can be verified by drilling down into the statements that fed it. In this example, the LLM identifies carbon dioxide leakage as one of the main public concerns. Our text analytics process independently surfaced key terms such as "carbon dioxide," "carbon waste," "safety" and "unproven carbon capture," which align with the LLM results shown in the word cloud. Using the interactive features of SAS Visual Analytics, we could drill down into the statements behind these themes to verify the LLM's responses.
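The pre-filter and the drill-down can be sketched in a few lines of Python. The key terms come from the example above, but the scoring scheme is our assumption: a simple count of term occurrences, with roughly the top 5% of statements kept. SAS's actual relevance logic is not described in the article.

```python
# Key terms reported in the article; the scoring scheme below is our assumption.
KEY_TERMS = ["carbon dioxide", "carbon waste", "safety", "unproven carbon capture"]


def relevance(text: str, terms: list[str]) -> int:
    """Count case-insensitive occurrences of the key terms in one statement."""
    lowered = text.lower()
    return sum(lowered.count(term) for term in terms)


def prefilter(statements: dict[str, str], terms: list[str], fraction: float = 0.05) -> list[str]:
    """Return the IDs of roughly the top `fraction` of statements by key-term hits.

    Statements with no hits are never selected; IDs are kept for traceability.
    """
    scored = [(relevance(text, terms), sid) for sid, text in statements.items()]
    hits = [(score, sid) for score, sid in scored if score > 0]
    hits.sort(key=lambda pair: (-pair[0], pair[1]))  # highest score first, then by ID
    k = max(1, round(fraction * len(statements)))
    return [sid for _, sid in hits[:k]]


def drill_down(statements: dict[str, str], term: str) -> list[str]:
    """List the IDs of every statement mentioning `term`, to check an LLM claim."""
    needle = term.lower()
    return [sid for sid, text in statements.items() if needle in text.lower()]
```

Only the statements returned by `prefilter` would be sent to the LLM. `drill_down` plays the role of the dashboard's drill-down: it finds the original statements behind a theme the LLM reports.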

Similarly, the dashboard lets users explore the other main themes the LLM identified, including groundwater contamination, whether Louisiana would provide adequate oversight, and concerns about well abandonment.

This integration of NLP with SAS Visual Analytics can help other users by:

Avoiding hallucinations: The NLP and text analytics pre-filter gathers the most relevant source data from across many documents and grounds the LLM in that data, making its outputs more accurate and reliable.

Improving time to value: Because the data is pre-filtered, a smaller LLM can handle the GenAI tasks efficiently and deliver results faster.

Ensuring privacy and security: A local vector database can be used with generative models so that only the relevant embeddings are made available to the LLM, whether through an API or a locally hosted instance of the model. This protects the privacy and security of sensitive data.

Reducing costs: Text analytics and NLP significantly reduce the amount of information sent to the LLM. In some cases, only 1% to 5% of the overall data is used to generate answers. This avoids excessive external API calls and reduces the computing resources needed to run local LLMs.

Supporting verification: SAS® Viya® provides end-to-end verification and traceability of results. Users can check information and trace each summary back to the statements it was derived from, which may number in the thousands. This traceability increases transparency and trust in the generated outputs.
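The privacy, cost and verification points all rely on the same pattern: keep the data local and send the model only a small set of relevant, ID-tagged text. The sketch below shows that pattern as a simple in-memory retrieval store based on cosine similarity. It is not SAS Viya, and it is not a real vector database product. The embeddings are assumed to come from a locally hosted model, which is not shown, and the two-dimensional vectors in the usage example are toy values.

```python
import math
from dataclasses import dataclass


@dataclass
class Entry:
    statement_id: str
    text: str
    vector: list[float]  # embedding from a locally hosted model (assumed, not shown)


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class LocalVectorStore:
    """Hypothetical in-memory store: all statements and vectors stay on this machine."""

    def __init__(self) -> None:
        self._entries: list[Entry] = []

    def add(self, statement_id: str, text: str, vector: list[float]) -> None:
        self._entries.append(Entry(statement_id, text, vector))

    def search(self, query: list[float], k: int = 3) -> list[Entry]:
        """Return the k entries most similar to the query vector."""
        ranked = sorted(self._entries, key=lambda e: cosine(query, e.vector), reverse=True)
        return ranked[:k]

    def context_for(self, query: list[float], k: int = 3) -> str:
        """Build the short, ID-tagged context that would be the only text sent to an LLM."""
        return "\n".join(f"[{e.statement_id}] {e.text}" for e in self.search(query, k))
```

A brute-force scan like this is fine for a few thousand statements. A production system would use a proper vector index instead, but the privacy and traceability properties come from the same design choice: retrieval happens locally, and every retrieved snippet carries its statement ID.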

As governments adopt LLMs to improve productivity and communication, they will need to preserve the accuracy and rigor of current methods while managing the costs of new technologies. Integrating NLP and text analytics with LLMs lets a government agency complete lengthy, complex tasks more easily, more quickly and at lower cost, while avoiding some of the risks inherent in using GenAI.

Learn more about what SAS Visual Analytics can do for your organization
