Most of us have experienced the annoyance of finding an important email in the spam folder of our inbox.

If you check the spam folder regularly, you might get annoyed by the incorrect filtering, but at least you’ve probably avoided significant harm. But if you didn’t know to check spam, you may have missed important information. Maybe it was that meeting invite from your manager, a job offer or even a legal notice. In these cases, the error would have caused more than frustration. In our digital society, we expect our emails to operate reliably.

Similarly, we trust our cars to operate reliably, whether we drive an autonomous or traditional vehicle. We’d be horrified if our cars randomly turned off while driving 80 miles an hour on a highway. A system error of that magnitude would likely cause significant harm to the driver, passengers and other drivers on the road.

These examples relate to the concept of robustness in technology. Just as we expect our emails to operate accurately and our cars to drive reliably, we expect our AI models to operate reliably and safely. An AI system that changes outputs depending on the day and the phase of the moon is useless to most organizations. And if issues occur, we need to have mechanisms that help us assess and manage potential risks. Below, we describe a few strategies for organizations to make sure their AI models are robust.

The importance of human oversight and monitoring

Organizations should consider employing a human-in-the-loop approach to create a solid foundation for robust AI systems. This approach involves humans actively participating in developing and monitoring model effectiveness and accuracy. In simpler terms, data scientists use special tools to combine their knowledge with technological capabilities. Also, workflow management tools can help organizations establish automated guardrails when creating AI models. The workflows are crucial in ensuring that the right subject matter experts are involved in creating the model.

Once the AI model is created and deployed, continuous monitoring of its performance becomes important. Monitoring involves regularly collecting data on the model’s performance based on its intended goals. Monitoring checkpoints are essential to flag errors or unexpected results before deviations in performance occur. If deviations do occur, data scientists within the organization can assess what changes need to be made, whether retraining the model or discontinuing its use.

Workflow management can also ensure that all future changes are made with consultation or approval from the subject matter experts. This human oversight adds an extra layer of reliability to the process. Additionally, workflow management can support future auditing needs by tracking comments and the history of changes.

Validating and auditing against a range of inputs

Robust data systems are tested against diverse inputs and real-world scenarios to ensure they can accommodate change while avoiding model decay or drift. Testing reduces unforeseen harm, ensures consistency of performance, and helps produce accurate results.

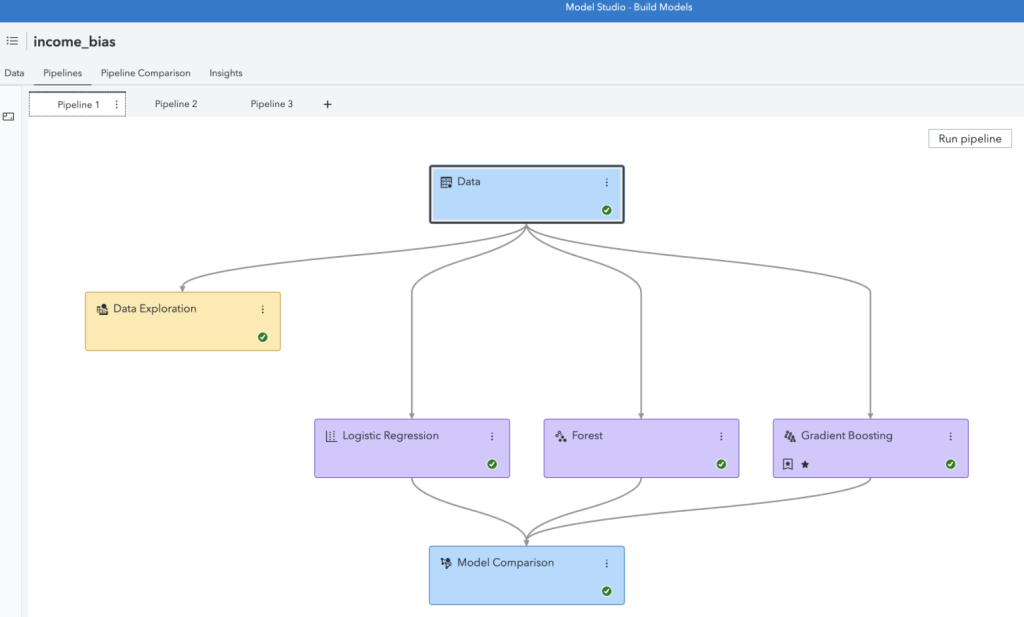

One of the ways users can test their model is by creating multiple model pipelines. Model pipelines allow users to run the model under different ranges of inputs and compare the performances under differing conditions. The comparison allows the users to select the most effective model, often called the champion model.
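
As a minimal sketch of that comparison, the Python below scores a few candidate models across several input scenarios and picks a champion. The candidate models, the scenario data and the selection rule (best worst-case accuracy, with mean accuracy as the tie-breaker) are illustrative assumptions, not a method described in this article.

```python
from statistics import mean


def accuracy(model, dataset):
    """Fraction of (input, label) pairs the model classifies correctly."""
    return mean(1.0 if model(x) == y else 0.0 for x, y in dataset)


def select_champion(models, scenarios):
    """Score every model on every scenario and return (champion_name, scores)."""
    scores = {
        name: {label: accuracy(model, data) for label, data in scenarios.items()}
        for name, model in models.items()
    }
    # Assumed rule: prefer the model whose weakest scenario is strongest,
    # then break ties on mean accuracy across scenarios.
    champion = max(
        scores,
        key=lambda name: (min(scores[name].values()), mean(scores[name].values())),
    )
    return champion, scores


# Hypothetical candidates: two threshold classifiers on one numeric input.
models = {
    "threshold_5": lambda x: x > 5,
    "threshold_7": lambda x: x > 7,
}
# Hypothetical scenarios: a typical input range and a shifted one.
scenarios = {
    "typical": [(1, False), (6, True), (9, True), (3, False)],
    "shifted": [(6, False), (7, False), (8, True), (10, True)],
}

champion, scores = select_champion(models, scenarios)
print("champion:", champion)
print("scores:", scores)
```

Ranking on the worst scenario favors models that hold up when conditions change. An organization that cares more about average performance could swap the order of the two key components.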

Once the champion model has been selected, organizations can regularly validate the model to identify when it starts drifting from the ideal state. Organizations actively monitor shifts in input variable distributions (data drift) and output variable distributions (concept drift) to prevent model drift. This approach is fortified by creating performance reports that help deployed models remain accurate and relevant over time.
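
One common way to quantify a shift in a variable's distribution is the Population Stability Index (PSI). The sketch below is illustrative only. The article does not name a drift metric, so the choice of PSI, the ten equal-width bins, the sample data and the 0.2 alert threshold (a widely used rule of thumb) are all assumptions.

```python
import math


def psi(baseline, recent, bins=10):
    """Population Stability Index between a baseline sample and a recent sample."""
    lo, hi = min(baseline), max(baseline)
    # Fall back to a width of 1.0 if every baseline value is identical.
    width = (hi - lo) / bins or 1.0

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Values outside the baseline range are clamped into the edge bins.
            idx = max(0, min(bins - 1, int((v - lo) / width)))
            counts[idx] += 1
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    expected, actual = proportions(baseline), proportions(recent)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


# Hypothetical input variable: training-time values versus recent production values.
baseline = [i / 100 for i in range(100)]
shifted = [0.5 + i / 200 for i in range(100)]

DRIFT_THRESHOLD = 0.2  # assumed rule-of-thumb cutoff for a significant shift

for label, sample in [("unchanged", baseline), ("shifted", shifted)]:
    value = psi(baseline, sample)
    print(label, round(value, 3), "drift" if value > DRIFT_THRESHOLD else "stable")
```

The same function can be applied to a model's output scores to watch for shifts on the output side.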

Using fail-safes for out-of-bound unexpected behaviors

If conditions don’t support accurate and consistent output, robust systems have built-in safeguards to minimize the harm. Alerts can be put in place to monitor model performance and indicate model decay. For instance, organizations can define KPI value sets for each model during deployment, such as an expected rate for misclassification. If the model’s misclassification rate eventually falls outside the KPI value set, the alert notifies the user that an intervention is required.
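
A KPI check of this kind can be sketched in a few lines of Python. The `KpiRange` class, the function names and the 0 to 10 percent acceptable range are hypothetical, chosen only to illustrate the idea.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class KpiRange:
    """Acceptable range for a KPI, fixed at deployment time (hypothetical type)."""
    lower: float
    upper: float

    def contains(self, value):
        return self.lower <= value <= self.upper


def misclassification_rate(y_true, y_pred):
    """Share of predictions that differ from the true labels."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("labels and predictions must be non-empty and equal length")
    return sum(t != p for t, p in zip(y_true, y_pred)) / len(y_true)


def check_kpi(y_true, y_pred, kpi):
    """Return the observed rate and whether it falls outside the KPI range."""
    rate = misclassification_rate(y_true, y_pred)
    return {"rate": rate, "alert": not kpi.contains(rate)}


# Assumed KPI: misclassification rate should stay between 0% and 10%.
kpi = KpiRange(lower=0.0, upper=0.10)

# Hypothetical batch of recent labeled outcomes and model predictions.
y_true = [1, 0, 1, 1, 0, 0, 1, 0, 1, 1]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0, 1, 0]

result = check_kpi(y_true, y_pred, kpi)
if result["alert"]:
    print(f"Intervention required: misclassification rate {result['rate']:.0%}")
```

In practice the alert would feed a notification or workflow tool instead of `print`, so that the right reviewers decide whether to retrain or retire the model.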

Alerts can also help indicate when a model is experiencing adversarial attacks, a common concern around model robustness. Adversarial attacks are designed to fool AI systems by making small, imperceptible input changes. One way to mitigate the impact of these attacks is through adversarial training, which involves training the AI system on misleading inputs or inputs intentionally modified to fool the system. This intentional training helps the system learn to identify and resist adversarial attacks, building system robustness.
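
To make the idea concrete, here is a small sketch of adversarial training for a logistic regression classifier in plain Python. The article does not specify an attack, so the perturbation method used here (the fast gradient sign method, FGSM), the toy data and every hyperparameter are assumptions chosen for illustration.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(w, b, x):
    """Predicted probability of class 1 for feature vector x."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def log_loss(w, b, x, y):
    p = min(max(predict(w, b, x), 1e-12), 1 - 1e-12)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(w, b, x, y, eps):
    """Shift each feature by eps in the direction that increases the loss."""
    # For logistic regression, d(loss)/dx_i = (p - y) * w_i.
    err = predict(w, b, x) - y
    return [xi + eps * sign(err * wi) for xi, wi in zip(x, w)]


def train(data, eps=0.0, epochs=200, lr=0.1):
    """Stochastic gradient descent; if eps > 0, also train on FGSM examples."""
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        for x, y in data:
            batch = [(x, y)]
            if eps > 0:
                # Adversarial training: add a perturbed copy with the same label.
                batch.append((fgsm(w, b, x, y, eps), y))
            for xs, ys in batch:
                err = predict(w, b, xs) - ys
                w = [wj - lr * err * xj for wj, xj in zip(w, xs)]
                b -= lr * err
    return w, b


# Toy, linearly separable data (hypothetical).
data = [([0.0, 0.2], 0), ([0.3, 0.1], 0), ([1.0, 0.9], 1), ([0.8, 1.1], 1)]
EPS = 0.2  # assumed perturbation budget per feature

w, b = train(data, eps=EPS)
x, y = data[2]
x_adv = fgsm(w, b, x, y, EPS)
print("clean loss:", log_loss(w, b, x, y))
print("adversarial loss:", log_loss(w, b, x_adv, y))
```

Real systems typically rely on a deep learning framework and stronger attacks, but the loop has the same shape: generate perturbed inputs against the current model, then train on them with the correct labels.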

Adaptable AI systems for real-world demands

Systems that only function under ideal conditions are of little use to organizations that need AI models that can scale with and adapt to change. A system’s robustness depends on an organization’s ability to validate and audit results on varied inputs, build in fail-safes for unexpected behaviors and keep humans in the loop. By taking these steps, we can ensure that our data-driven systems operate reliably and safely and minimize potential risks, thus reducing the possibility of hazards such as vehicular glitches or important emails being misclassified as spam.

Want to learn more? Get in on this discussion about trustworthy AI using SAS®

Kristi Boyd and Vrushali Sawant contributed to this article
