
Introduction

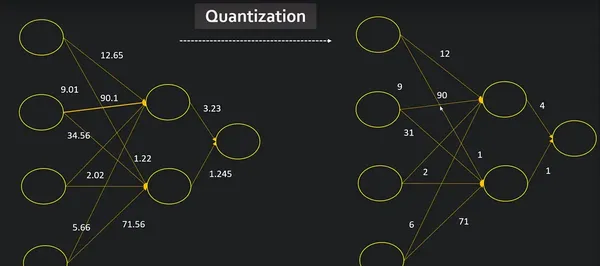

Let's say you have a talented friend who can recognize patterns, such as determining whether an image contains a cat or a dog. This friend has a precise way of doing things, as if he had an encyclopedia in his head. But here's the problem: this encyclopedia is huge and takes significant time and effort to use.

Consider simplifying the process by converting that big encyclopedia into a handy cheat sheet. This is similar to how model quantization works for intelligent computer programs. It takes these programs, which can be excessively large and slow, and streamlines them, making them faster and less demanding on the machine. How does this work? Well, it's similar to rounding off difficult numbers. If the numbers in your friend's encyclopedia were very long and detailed, you could decide to simplify them to speed up the process. Model quantization techniques reduce the precision of the 'numbers' that the computer uses to recognize objects.

So why should we care? Imagine that your friend helps you on your smartphone. You want it to recognize objects fast without using too much battery or space. Model quantization makes your phone's brain operate more efficiently, much like a clever friend who can quickly identify things without having to consult a large encyclopedia every time.

Learning Objectives

Examine key components of quantization, encompassing weights and activations in a neural network.

Explore various techniques for model quantization to optimize efficiency and reduce memory usage.

Understand how quantization affects a simple neural network by analyzing its architecture and performance.

Compare the size and performance of a quantized convolutional neural network against its original counterpart.

This article was published as a part of the Data Science Blogathon.

What Is Model Quantization?

Quantization is a technique that allows models to run faster and use less memory. By converting 32-bit floating-point numbers (the float32 data type) to lower-precision formats such as 8-bit integers (the int8 data type), we can reduce the computational requirements of our model.

Quantization is the process of reducing the precision of a model's weights and activations from floating-point to smaller bit-width representations. It aims to make the model easier to deploy on constrained devices such as smartphones and embedded systems by reducing memory footprint and increasing inference speed.
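To make the float32-to-int8 conversion concrete, here is a minimal sketch of affine quantization in plain Python. The helper names (`quantize_params`, `quantize`, `dequantize`) and the sample weights are illustrative assumptions, not part of any library; real frameworks apply the same idea to whole tensors.

```python
def quantize_params(x_min, x_max, q_min=-128, q_max=127):
    """Return (scale, zero_point) mapping [x_min, x_max] onto [q_min, q_max]."""
    # Widen the range to include 0.0 so that zero is exactly representable.
    x_min, x_max = min(x_min, 0.0), max(x_max, 0.0)
    scale = (x_max - x_min) / (q_max - q_min)
    zero_point = round(q_min - x_min / scale)
    return scale, zero_point


def quantize(x, scale, zero_point, q_min=-128, q_max=127):
    """Map a float to an integer code, clamping to the int8 range."""
    q = round(x / scale) + zero_point
    return max(q_min, min(q_max, q))


def dequantize(q, scale, zero_point):
    """Map an integer code back to an approximate float."""
    return scale * (q - zero_point)


# Hypothetical weights, chosen only for illustration.
weights = [-0.5, -0.12, 0.0, 0.37, 1.5]
scale, zero_point = quantize_params(min(weights), max(weights))
codes = [quantize(w, scale, zero_point) for w in weights]
restored = [dequantize(q, scale, zero_point) for q in codes]

for w, q, r in zip(weights, codes, restored):
    print(f"{w:+.4f} -> {q:4d} -> {r:+.4f}")
```

Each restored value differs from the original by at most half a quantization step (`scale / 2`), which is the rounding error that quantization trades for a 4x smaller representation.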

Why Do We Need Model Quantization?

Model quantization is important for many reasons, especially when deploying machine learning models in real-world scenarios. Here are the main reasons for using it:

Limited Memory Resources: Resource-constrained devices, such as mobile phones, IoT devices, and edge computing devices, often have limited memory. Quantization significantly reduces the memory footprint of models, making them more feasible to deploy on such devices.

Lower Energy Consumption: Quantized models generally require less computation, leading to lower energy consumption, particularly during inference. This is especially important for battery-powered devices and environments where energy efficiency is a priority.

Faster Inference: Quantization reduces the precision of weights and activations, so each arithmetic operation is cheaper and less data has to move through memory during inference. This results in faster model execution, which is crucial for real-time applications and services that require quick responses.
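The memory argument is simple arithmetic: the storage needed is the number of parameters times the bytes per value. The sketch below works this out for a small fully connected network; the layer sizes and the helper `count_dense_params` are assumptions chosen for illustration.

```python
def count_dense_params(layer_sizes):
    """Number of weights and biases in a stack of fully connected layers."""
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


BYTES_PER_VALUE = {"float32": 4, "float16": 2, "int8": 1}

# Hypothetical network for 28x28 images: 784 inputs, 128 hidden units, 10 outputs.
layer_sizes = [784, 128, 10]
n_params = count_dense_params(layer_sizes)

print(f"parameters: {n_params}")
for dtype, nbytes in BYTES_PER_VALUE.items():
    print(f"{dtype}: {n_params * nbytes / 1024:.1f} KiB")
```

A real model file is slightly larger than these numbers because it also stores metadata such as scales and zero points, but the 4x ratio between float32 and int8 dominates.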

What Are the Key Components of Model Quantization?

Model quantization is a technique used in machine learning to reduce the memory requirements and computational cost of a trained model. The goal is to make models more efficient, especially for deployment on resource-constrained devices such as mobile phones, embedded systems, or edge devices. This process involves representing the values of the model (weights and activations) using a reduced number of bits.

Here are the key aspects of model quantization:

1. Parameter Quantization

This involves reducing the precision of the model's weights. Typically, deep learning models use 32-bit floating-point numbers to represent weights. In quantization, these values are replaced with lower-bit representations, such as 8-bit integers. This reduces the memory footprint of the model and speeds up inference.

2. Activation Quantization

In addition to quantizing the weights, quantization can be applied to the activation values produced by each layer during inference. Activation quantization involves representing intermediate feature maps with lower-precision data types, further reducing memory requirements.

3. Post-training and Quantization-aware Training

Model quantization can be performed after a model has been trained (post-training quantization) or during the training process (quantization-aware training). Quantization-aware training involves adjusting the training process to take the reduced precision into account during forward and backward passes.

4. Dynamic Quantization

In dynamic quantization, the weights are quantized ahead of time, while the quantization parameters for activations are computed on the fly during inference from the observed range of activation values. This allows greater flexibility and can improve model performance.
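The idea of picking quantization parameters from the observed range at runtime can be sketched in a few lines. This is a simplified, symmetric variant written for illustration; `dynamic_quantize` and the two sample batches are assumptions, not a framework API.

```python
def dynamic_quantize(values, q_max=127):
    """Symmetric int8 quantization with a scale derived from this batch only."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / q_max if max_abs > 0 else 1.0
    codes = [max(-q_max, min(q_max, round(v / scale))) for v in values]
    return codes, scale


# Two hypothetical activation batches with very different magnitudes.
small_batch = [0.02, -0.01, 0.005]
large_batch = [12.0, -7.5, 3.2]

codes_small, scale_small = dynamic_quantize(small_batch)
codes_large, scale_large = dynamic_quantize(large_batch)

print("small batch:", codes_small, "scale =", scale_small)
print("large batch:", codes_large, "scale =", scale_large)
```

Because the scale adapts to each batch, both batches use the full integer range. A fixed scale chosen for the large batch would round every value in the small batch to zero.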

Benefits of Model Quantization

The benefits of model quantization include:

Smaller Model Size: Quantized models have a small memory footprint, making them suitable for deployment on devices with limited storage.

Faster Inference: Low-precision calculations require fewer computational resources, resulting in faster inference times. This is especially important for real-time applications.

Energy Efficiency: Lower computational requirements also result in lower energy consumption, which is crucial for devices with limited battery life.

Despite these advantages, model quantization comes with challenges. Lower precision can lead to a loss of model accuracy, and finding the right balance between model size, inference speed, and accuracy is a trade-off. Achieving optimal results for a specific use case requires careful consideration and sometimes fine-tuning.
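The trade-off between precision and accuracy can be observed directly by quantizing the same values at several bit widths and measuring the round-trip error. The sketch below uses synthetic, normally distributed values as a stand-in for real weights; the function name and the bit widths are assumptions made for this illustration.

```python
import random


def quantization_mae(values, bits):
    """Mean absolute round-trip error for symmetric quantization at `bits` bits."""
    q_max = 2 ** (bits - 1) - 1
    scale = max(abs(v) for v in values) / q_max
    restored = [scale * max(-q_max, min(q_max, round(v / scale))) for v in values]
    return sum(abs(v - r) for v, r in zip(values, restored)) / len(values)


random.seed(0)
# Synthetic "weights": 10,000 samples from a standard normal distribution.
weights = [random.gauss(0.0, 1.0) for _ in range(10_000)]

errors = {bits: quantization_mae(weights, bits) for bits in (16, 8, 4, 2)}
for bits, err in errors.items():
    print(f"{bits:>2}-bit: mean absolute error = {err:.6f}")
```

The error grows as the bit width shrinks. How much of that error a model can tolerate depends on the task, which is why accuracy should be measured after quantization rather than assumed.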

Model Quantization

Post-training quantization includes general techniques to reduce CPU and hardware accelerator latency, processing, power, and model size with little degradation in model accuracy. These techniques can be performed on an already-trained float TensorFlow model and applied during TensorFlow Lite conversion.

import tensorflow as tf

from tensorflow import keras

import numpy as np

import pathlib

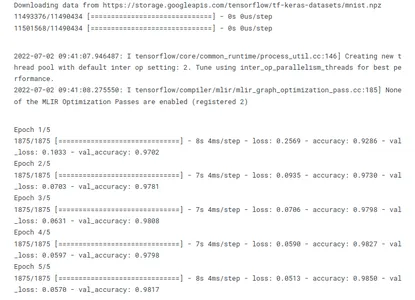

Training the Model

mnist = keras.datasets.mnist

(train_images, train_labels), (test_images, test_labels) = mnist.load_data()

# Normalize the input images so that each pixel value is between 0 and 1.

train_images = (train_images / 255.0).astype(np.float32)

test_images = (test_images / 255.0).astype(np.float32)

# Define the model architecture

model = keras.Sequential([

    keras.layers.InputLayer(input_shape=(28, 28)),

    keras.layers.Reshape(target_shape=(28, 28, 1)),

    keras.layers.Conv2D(filters=12, kernel_size=(3, 3), activation=tf.nn.relu),

    keras.layers.MaxPooling2D(pool_size=(2, 2)),

    keras.layers.Flatten(),

    keras.layers.Dense(10)

])

# Train the digit classification model

model.compile(optimizer="adam",

              loss=keras.losses.SparseCategoricalCrossentropy(from_logits=True),

              metrics=['accuracy'])

model.fit(

    train_images,

    train_labels,

    epochs=5,

    validation_data=(test_images, test_labels)

)

# Save the trained float model

models_dir = pathlib.Path('models')

models_dir.mkdir(exist_ok=True, parents=True)

model.save(f'{models_dir}/tf_model.h5')

Various Quantization Techniques

Quantization is a process used in digital signal processing and data compression to reduce the number of bits needed to represent data without losing too much information. In the context of machine learning and neural networks, quantization is often employed to reduce the precision of weights and activations, leading to more efficient model deployment on hardware with limited resources. Here are several quantization techniques:

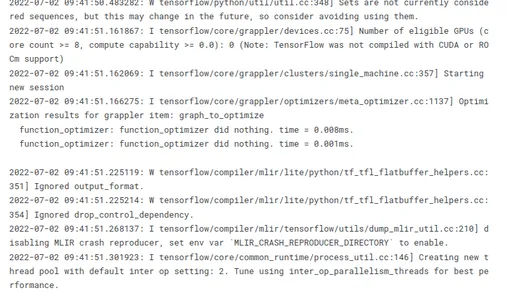

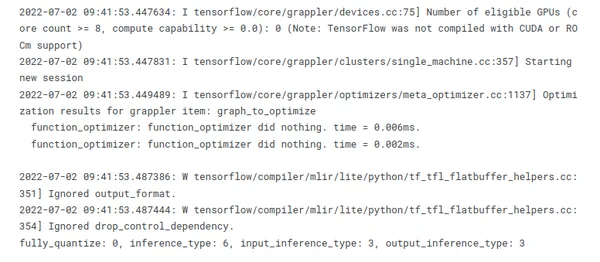

1. Convert to a TensorFlow Lite Model

Without any optimization flags, the TensorFlow Lite converter turns the Keras model into TensorFlow Lite's FlatBuffer format and keeps the weights in 32-bit floating point. This unquantized model serves as the baseline for the quantized variants that follow.

converters = tf.lite.TFLiteConverter.from_keras_model(model)

tflite_models = converters.convert()

tflite_model_files = models_dir/"tflite_model.tflite"

tflite_model_files.write_bytes(tflite_models)

2. Dynamic Quantization

The simplest form of post-training quantization statically quantizes only the weights from floating point to integer, which has 8 bits of precision. At inference, weights are converted from 8 bits of precision to floating point and computed using floating-point kernels. This conversion is done once and cached to reduce latency.

converter = tf.lite.TFLiteConverter.from_keras_model(model)

converter.optimizations = [tf.lite.Optimize.DEFAULT]

tflite_dynamic_quant_model = converter.convert()

tflite_dynamic_quant_model_file = models_dir/"tflite_dynamic_quant_model.tflite"

tflite_dynamic_quant_model_file.write_bytes(tflite_dynamic_quant_model)

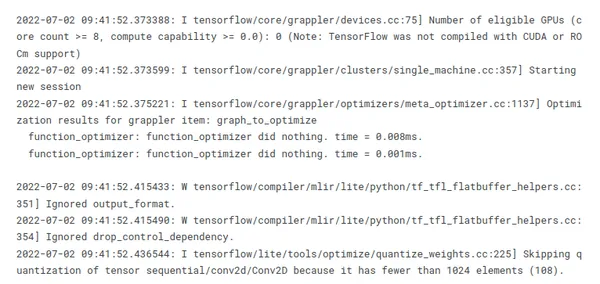

3. Integer Quantization

Integer quantization is an optimization strategy that converts 32-bit floating-point numbers (such as weights and activation outputs) to the nearest 8-bit fixed-point numbers. This results in a smaller model and increased inference speed.

To quantize the variable data (such as model input/output and intermediates between layers), you need to provide a representative dataset. This is a generator function that provides a set of input data that is large enough to represent typical values. It allows the converter to estimate a dynamic range for all the variable data. (The dataset does not need to be unique compared to the training or evaluation dataset.)

To support multiple inputs, each representative data point is a list, and elements in the list are fed to the model according to their indices.

def representative_data_gen():

    for input_value in tf.data.Dataset.from_tensor_slices(train_images).batch(1).take(100):

        yield [input_value]

converter = tf.lite.TFLiteConverter.from_keras_model(model)

converter.optimizations = [tf.lite.Optimize.DEFAULT]

converter.representative_dataset = representative_data_gen

# Ensure that if any ops can't be quantized, the converter throws an error

converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS_INT8]

# Set the input and output tensors to uint8

converter.inference_input_type = tf.uint8

converter.inference_output_type = tf.uint8

tflite_int_quant_model = converter.convert()

tflite_int_quant_model_file = models_dir/"tflite_int_quant_model.tflite"

# Write the integer-quantized model (not the dynamic-range one) to disk

tflite_int_quant_model_file.write_bytes(tflite_int_quant_model)

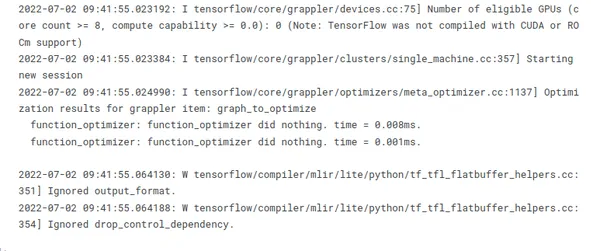
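The role of the representative dataset, and what it means for a model to take uint8 inputs, can be illustrated with a small calibration sketch in plain Python. The helpers `calibrate` and `to_uint8` and the sample values are assumptions for illustration only. With a real converted model, the scale and zero point would instead be read from the interpreter's input details (the `quantization` entry of `interpreter.get_input_details()[0]`).

```python
def calibrate(representative_samples, q_min=0, q_max=255):
    """Estimate uint8 quantization parameters from a sample of typical inputs."""
    lo = min(min(sample) for sample in representative_samples)
    hi = max(max(sample) for sample in representative_samples)
    # Widen the range to include 0.0 so that zero is exactly representable.
    lo, hi = min(lo, 0.0), max(hi, 0.0)
    if hi == lo:
        raise ValueError("samples must span a non-empty range")
    scale = (hi - lo) / (q_max - q_min)
    zero_point = round(q_min - lo / scale)
    return scale, zero_point


def to_uint8(sample, scale, zero_point):
    """Quantize one float input the way a uint8 model expects to receive it."""
    return [max(0, min(255, round(v / scale) + zero_point)) for v in sample]


# Hypothetical normalized pixel values in [0, 1], standing in for MNIST images.
samples = [[0.0, 0.25, 1.0], [0.1, 0.5, 0.9], [0.0, 0.75, 1.0]]
scale, zero_point = calibrate(samples)
quantized = to_uint8([0.0, 0.5, 1.0], scale, zero_point)

print("scale:", scale, "zero_point:", zero_point)
print("quantized input:", quantized)
```

If the samples did not cover the range seen in production, values outside the calibrated range would be clamped, which is why the representative data should resemble real inputs.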

4. Float16 Quantization

Converting weights to 16-bit floating-point values during model conversion from TensorFlow to TensorFlow Lite's FlatBuffer format results in a 2x reduction in model size. Some hardware, like GPUs, can compute natively in this reduced-precision arithmetic, realizing a speedup over traditional floating-point execution. The TensorFlow Lite GPU delegate can be configured to run in this way.

However, a model converted to float16 weights can still run on the CPU without additional modification: the float16 weights are upsampled to float32 prior to the first inference. This permits a significant reduction in model size in exchange for a minimal impact on latency and accuracy.

converter = tf.lite.TFLiteConverter.from_keras_model(model)

converter.optimizations = [tf.lite.Optimize.DEFAULT]

converter.target_spec.supported_types = [tf.float16]

tflite_float16_quant_model = converter.convert()

tflite_float16_quant_model_file = models_dir/"tflite_float16_quant_model.tflite"

tflite_float16_quant_model_file.write_bytes(tflite_float16_quant_model)

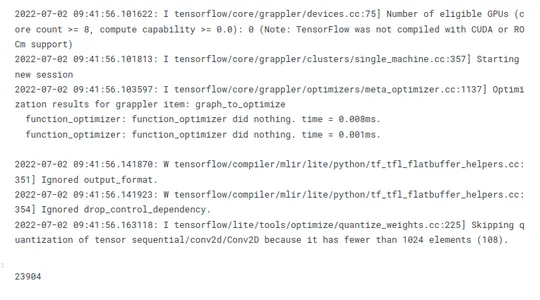
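The 2x size reduction and the small precision loss of float16 can be checked with Python's standard `struct` module, which supports IEEE 754 half precision through the `e` format character. The sample weights below are made up for illustration.

```python
import struct


def to_float16_and_back(x):
    """Round a Python float to IEEE 754 half precision and return it as a float."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


# Hypothetical weights, chosen only for illustration.
weights = [0.1, -0.333333, 1.0, 0.000123, 3.14159]
half = [to_float16_and_back(w) for w in weights]

bytes_fp32 = len(struct.pack(f"<{len(weights)}f", *weights))
bytes_fp16 = len(struct.pack(f"<{len(weights)}e", *weights))

for w, h in zip(weights, half):
    print(f"{w:+.6f} -> {h:+.6f} (relative error {abs(w - h) / abs(w):.2e})")
print(f"float32: {bytes_fp32} bytes, float16: {bytes_fp16} bytes")
```

Float16 keeps roughly three significant decimal digits, so the relative error stays small for values in its normal range, while storage is halved.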

5. 16×8 Quantization

Converting activations to 16-bit integer values and weights to 8-bit integer values during model conversion from TensorFlow to TensorFlow Lite's FlatBuffer format can improve the accuracy of the quantized model significantly when activations are sensitive to quantization, while still achieving an almost 3-4x reduction in model size. Moreover, this fully quantized model can be consumed by integer-only hardware accelerators.

converter = tf.lite.TFLiteConverter.from_keras_model(model)

converter.optimizations = [tf.lite.Optimize.DEFAULT]

# Activations are quantized too, so the converter needs calibration data

converter.representative_dataset = representative_data_gen

converter.target_spec.supported_ops = [tf.lite.OpsSet.EXPERIMENTAL_TFLITE_BUILTINS_ACTIVATIONS_INT16_WEIGHTS_INT8]

tflite_16x8_quant_model = converter.convert()

tflite_16x8_quant_model_file = models_dir/"tflite_16x8_quant_model.tflite"

tflite_16x8_quant_model_file.write_bytes(tflite_16x8_quant_model)
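Why 16-bit activations help when activations are sensitive to quantization can be seen with a small experiment: a single large outlier stretches the quantization range, and with only 8 bits the small values lose most of their resolution. The data and the helper `roundtrip_error` are assumptions made for this sketch.

```python
def roundtrip_error(values, bits):
    """Largest absolute error after symmetric quantization at `bits` bits."""
    q_max = 2 ** (bits - 1) - 1
    scale = max(abs(v) for v in values) / q_max
    return max(abs(v - scale * round(v / scale)) for v in values)


# Hypothetical activations: one large outlier and many small values.
activations = [50.0] + [0.01 * i for i in range(1, 100)]

err_int8 = roundtrip_error(activations, 8)
err_int16 = roundtrip_error(activations, 16)

print(f"int8  activations: max error = {err_int8:.5f}")
print(f"int16 activations: max error = {err_int16:.5f}")
```

With int16 the quantization step is 258 times smaller for the same range, so the small activations survive the round trip far more accurately.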

How Does Quantization of a Simple Neural Network Work?

Let's apply quantization to a neural network. We'll create a simple network with one hidden layer, then we'll quantize and dequantize its weights.

In PyTorch, quantization is achieved using a QuantStub and DeQuantStub to mark the points in the model where the data needs to be converted to quantized form and converted back to floating-point form, respectively. After defining the network with these stubs, we use the torch.quantization.prepare and torch.quantization.convert functions to quantize the model.

The process of quantizing a model in PyTorch involves the following steps:

Define a neural network and mark the points in the model where the data needs to be converted to quantized form and back to floating-point form. This is done with QuantStub and DeQuantStub modules, which delimit the part of the network to be quantized.

Specify a quantization configuration for the model using torch.quantization.QConfig. This configuration defines how the model should be quantized, including precision settings and the target backend.

Prepare the model for quantization using torch.quantization.prepare. For post-training static quantization, as used here, this step inserts observer modules that record the range of the activations. Quantization-aware training instead uses torch.quantization.prepare_qat, which works on a model in training mode and inserts fake quantization modules to simulate quantization during training.

Calibrate the model on a calibration dataset. During calibration, the model is run on a calibration dataset, and the range of the activations is observed. This is used to determine the parameters for quantization, ensuring an accurate representation of the data.

Convert the prepared and calibrated model to a quantized version using torch.quantization.convert. This function changes the observed modules to use quantized weights, completing the process and producing a quantized version of the original neural network.

Import all necessary libraries:
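What a converted linear layer computes can be sketched without PyTorch: inputs and weights are stored as integers, multiplied and accumulated exactly in integer arithmetic, and rescaled to a float once at the end. The function names, the sample values, and the two scales below are assumptions for illustration (the activation scale stands in for what a calibration pass would produce); this is not PyTorch's implementation.

```python
def quantize_symmetric(values, scale, q_max=127):
    """Round floats to signed 8-bit integer codes using a single scale."""
    return [max(-q_max, min(q_max, round(v / scale))) for v in values]


def quantized_dot(x_q, w_q, x_scale, w_scale, bias):
    """Integer multiply-accumulate followed by one rescale back to float."""
    acc = sum(xq * wq for xq, wq in zip(x_q, w_q))  # exact integer arithmetic
    return acc * (x_scale * w_scale) + bias


x = [0.5, -1.2, 0.8, 2.0]     # activations entering the layer
w = [0.3, 0.1, -0.4, 0.25]    # weights of one output neuron
bias = 0.05

x_scale = 2.0 / 127   # assumed result of calibrating activations to [-2, 2]
w_scale = 0.4 / 127   # derived from the largest weight magnitude

y_float = sum(xi * wi for xi, wi in zip(x, w)) + bias
y_quant = quantized_dot(
    quantize_symmetric(x, x_scale),
    quantize_symmetric(w, w_scale),
    x_scale, w_scale, bias,
)

print("float result:    ", y_float)
print("quantized result:", y_quant)
```

The two results agree closely but not exactly. That small gap is the quantization error that the calibration step tries to keep low by choosing scales that fit the observed data.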

import numpy as np

import matplotlib.pyplot as plt

import torch

import torch.nn as nn

import torch.optim as optim

import torchvision

import torchvision.transforms as transforms

from torch.utils.data import DataLoader

import sys

import io

# Define the network architecture

class Net(nn.Module):

    def __init__(self):

        super(Net, self).__init__()

        # Marks where float inputs are converted to quantized tensors

        self.quant = torch.quantization.QuantStub()

        self.fc1 = nn.Linear(28 * 28, 128)

        self.fc2 = nn.Linear(128, 10)

        # Marks where quantized outputs are converted back to float

        self.dequant = torch.quantization.DeQuantStub()

    def forward(self, x):

        # Reshape the input tensor to a vector of size 28*28

        x = x.view(-1, 28 * 28)

        x = self.quant(x)

        x = torch.relu(self.fc1(x))

        # Apply the second fully connected layer

        x = self.fc2(x)

        x = self.dequant(x)

        return x

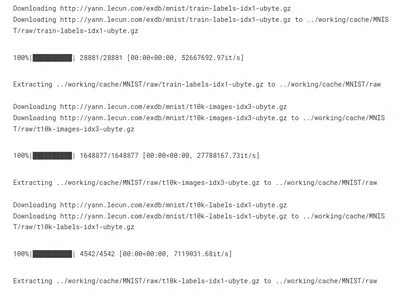

# Load the MNIST dataset

rework = transforms.Compose([transforms.ToTensor(), transforms.Normalize

((0.1307,), (0.3081,))])

trainset = torchvision.datasets.MNIST(root=”../working/cache”, prepare=True,

obtain=True, rework=rework)

trainloader = DataLoader(trainset, batch_size=64, shuffle=True)

# Outline loss perform and optimizer

internet = Internet()

criterion = nn.CrossEntropyLoss()

optimizer = optim.SGD(internet.parameters(), lr=0.01)

# Practice the community

for epoch in vary(2): # loop over the dataset a number of instances

running_loss = 0.0

for i, knowledge in enumerate(trainloader, 0):

# get the inputs; knowledge is an inventory of [inputs, labels]

inputs, labels = knowledge

# zero the parameter gradients

optimizer.zero_grad()

# ahead + backward + optimize

outputs = internet(inputs)

loss = criterion(outputs, labels)

loss.backward()

optimizer.step()

# print statistics

running_loss += loss.merchandise()

if i % 200 == 199: # print each 200 mini-batches

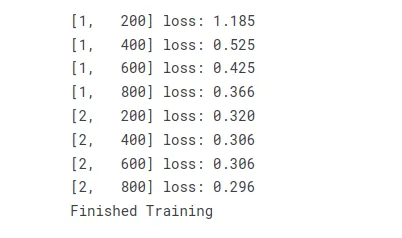

print(“[%d, %5d] loss: %.3f” %

(epoch + 1, i + 1, running_loss / 200))

running_loss = 0.0

print(“Completed Coaching”)

# Specify quantization configuration

internet.qconfig = torch.ao.quantization.get_default_qconfig(“onednn”)

# Put together the mannequin for static quantization.

net_prepared = torch.quantization.put together(internet)

# Now we convert the mannequin to a quantized model.

net_quantized = torch.quantization.convert(net_prepared)

buf = io.BytesIO()

torch.save(internet.state_dict(), buf)

size_original = sys.getsizeof(buf.getvalue())

buf = io.BytesIO()

torch.save(net_quantized.state_dict(), buf)

size_quantized = sys.getsizeof(buf.getvalue())

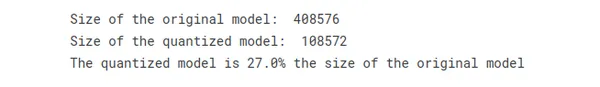

print(“Measurement of the unique mannequin: “, size_original)

print(“Measurement of the quantized mannequin: “, size_quantized)

print(f”The quantized mannequin is {np.spherical(100.*(size_quantized )/size_original)}% the dimensions of the unique mannequin”)

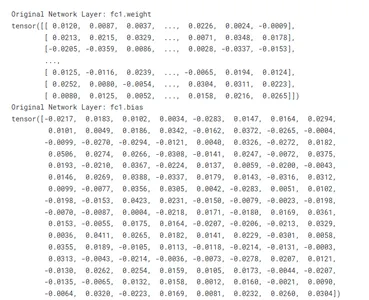

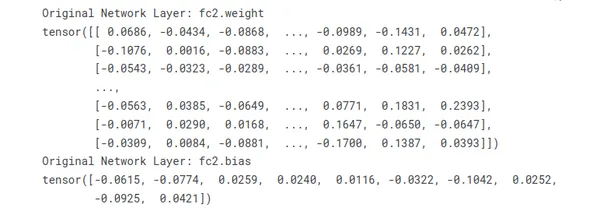

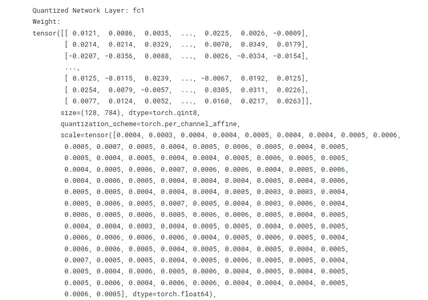

# Print out the weights of the original network

for name, param in net.named_parameters():

    print("Original Network Layer:", name)

    print(param.data)

# Print out the weights of the quantized network

for name, module in net_quantized.named_modules():

    if isinstance(module, nn.quantized.Linear):

        print("Quantized Network Layer:", name)

        print("Weight:")

        print(module.weight())

        print("Bias:")

        # bias is a method on quantized Linear modules, so it must be called

        print(module.bias())

Comparing a Quantized and Non-Quantized Model

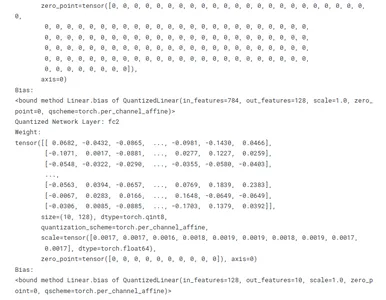

The example below shows how the quantized model can be used in the same way as the original model. It also demonstrates the trade-off between precision and memory usage/computation speed that comes with quantization. The quantized model uses less memory and is faster to compute, but its outputs are not identical to those of the original model because of quantization error.

Here is a summary of the details and a comparison with the original model:

Tensor Values: In the quantized model, these are quantized values of the weights and biases, whereas the original model stores them in floating-point precision. These values are used in the computations performed by the layer, and they directly affect the layer's output.

Size: This is the shape of the weight or bias tensor, and it should be the same in both the original and quantized model. In a fully connected layer, it corresponds to the number of neurons in the current layer and the number of neurons in the previous layer.

Dtype: In the original model, the data type of the tensor values is usually torch.float32 (32-bit floating point), while in the quantized model it is a quantized data type like torch.qint8 (8-bit quantized integer). This reduces the memory usage and computational requirements of the model.

Quantization_scheme: This is specific to the quantized model. It is the type of quantization used; for example, torch.per_channel_affine means different channels (e.g., neurons in a layer) can have different scale and zero_point values.

Scale & Zero Point: These are parameters of the quantization process and are specific to the quantized model. They are used to convert between the quantized and dequantized forms of the tensor values.

Axis: This indicates the dimension along which the quantization parameters vary. This is also specific to the quantized model.

Requires_grad: This indicates whether the tensor is a model parameter that is updated during training. It should be the same in both the original and quantized models.
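The difference between a single scale for the whole tensor and one scale per channel, as in the per-channel affine scheme described above, can be illustrated with a small sketch. The weight values and helper names are assumptions; each row stands for the weights of one output neuron.

```python
def symmetric_scale(values, q_max=127):
    """Scale that maps the largest magnitude in `values` onto q_max."""
    return max(abs(v) for v in values) / q_max


def max_roundtrip_error(values, scale):
    """Largest absolute error after quantizing and dequantizing with `scale`."""
    return max(abs(v - scale * round(v / scale)) for v in values)


# Hypothetical weight matrix: row 0 has tiny weights, row 1 has large ones.
weight_rows = [
    [0.010, -0.020, 0.015],
    [1.500, -2.000, 0.750],
]

# Per-tensor: one scale shared by every row.
per_tensor_scale = symmetric_scale([w for row in weight_rows for w in row])
# Per-channel: one scale per row (axis 0 in the terminology above).
per_channel_scales = [symmetric_scale(row) for row in weight_rows]

err_tensor = max_roundtrip_error(weight_rows[0], per_tensor_scale)
err_channel = max_roundtrip_error(weight_rows[0], per_channel_scales[0])

print(f"row 0 error with per-tensor scale:  {err_tensor:.6f}")
print(f"row 0 error with per-channel scale: {err_channel:.6f}")
```

With a shared scale, the row of small weights is quantized very coarsely because the scale is dictated by the large weights in the other row. A per-channel scale avoids this at the cost of storing one scale per row.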

# Suppose now we have some enter knowledge

input_data = torch.randn(1, 28 * 28)

# We are able to cross this knowledge by each the unique and quantized fashions

output_original = internet(input_data)

output_quantized = net_quantized(input_data)

# The outputs needs to be comparable, as a result of the quantized mannequin is a lower-precision

# approximation of the unique mannequin. Nevertheless, they will not be precisely the identical

# due to the quantization course of.

print(“Output from authentic mannequin:”, output_original.knowledge)

print(“Output from quantized mannequin:”, output_quantized.knowledge)

# The distinction between the outputs is a sign of the “quantization error”,

# which is the error launched by the quantization course of.

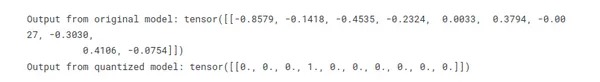

quantization_error = (output_original – output_quantized).abs().imply()

print(“Quantization error:”, quantization_error)

# The weights of the unique mannequin are saved in floating level precision, so that they

# take up extra reminiscence than the quantized weights. We are able to test this utilizing the

# `element_size` methodology, which returns the dimensions in bytes of 1 aspect of the tensor.

print(f”Measurement of 1 weight in authentic mannequin: {internet.fc1.weight.element_size()} bytes (32bit)”)

print(f”Measurement of 1 weight in quantized mannequin: {net_quantized.fc1.weight().element_size()} byte (8bit)”)

Conclusion

Model quantization is the process of making smart programs on our computers more compact and quicker, allowing them to function properly even on smaller machines. It's like transforming your computer's brain into a faster, more efficient assistant!

As the field of machine learning continues to evolve, the effective use of quantization techniques remains crucial for deploying efficient, high-performance models across a variety of platforms, from edge devices to other resource-constrained environments.

Key Takeaways

Quantization techniques can be used to reduce the precision of deep learning models and improve their efficiency.

There are several approaches to quantization, including post-training quantization and quantization-aware training.

Best practices for quantization include choosing the appropriate precision, using a representative dataset, fine-tuning the model, and monitoring the accuracy.

The balance between accuracy and efficiency will depend on the specific characteristics of the model and the task at hand.

Frequently Asked Questions

A. Quantization is the process of reducing the number of bits needed to represent data. In machine learning, it is often used to reduce the precision of weights and activations in neural networks for more efficient deployment.

A. Quantization is important because it reduces the memory footprint and computational requirements of models, making them more suitable for deployment on resource-constrained devices such as mobile phones or edge devices.

A. Weight quantization involves reducing the precision of the model's weights. Binary quantization specifically sets weights to either -1 or 1, drastically reducing the number of bits needed to represent each weight.

A. Fixed-point quantization assigns a fixed number of bits to represent activations, while dynamic quantization adapts the quantization parameters to the input distribution at runtime, offering more flexibility.

A. Post-training quantization involves quantizing a pre-trained model after training is completed. It is common because it allows pre-existing models to be used in resource-constrained environments.
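As a small illustration of the binary quantization mentioned in the answers above, the sketch below maps weights to +1 or -1 by sign and packs them at one bit per weight. The helper names, the sample weights, and the choice to treat zero as +1 are assumptions made for this example.

```python
def binarize(weights):
    """Map each weight to +1 or -1 by sign (zero is treated as +1 here)."""
    return [1 if w >= 0 else -1 for w in weights]


def pack_bits(signs):
    """Pack +1/-1 values into bytes, one bit per weight (a set bit means +1)."""
    packed = bytearray((len(signs) + 7) // 8)
    for i, s in enumerate(signs):
        if s == 1:
            packed[i // 8] |= 1 << (i % 8)
    return bytes(packed)


# Hypothetical weights, chosen only for illustration.
weights = [0.7, -0.2, 0.05, -1.3, 0.0, 0.9, -0.4, 0.1, -0.6]
signs = binarize(weights)
packed = pack_bits(signs)

print("signs:", signs)
print(f"{len(weights)} weights: {4 * len(weights)} bytes as float32, "
      f"{len(packed)} bytes when binarized")
```

Nine float32 weights occupy 36 bytes, while their binarized form fits in 2 bytes. The price is that all magnitude information is lost, so binary networks generally need to be trained with this constraint in mind.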

The media shown in this article is not owned by Analytics Vidhya and is used at the Author's discretion.
